cargo-nextest

Welcome to the home page for cargo-nextest, a next-generation test runner for Rust projects.

Features

- Clean, beautiful user interface. Nextest presents its results concisely so you can see which tests passed and failed at a glance.

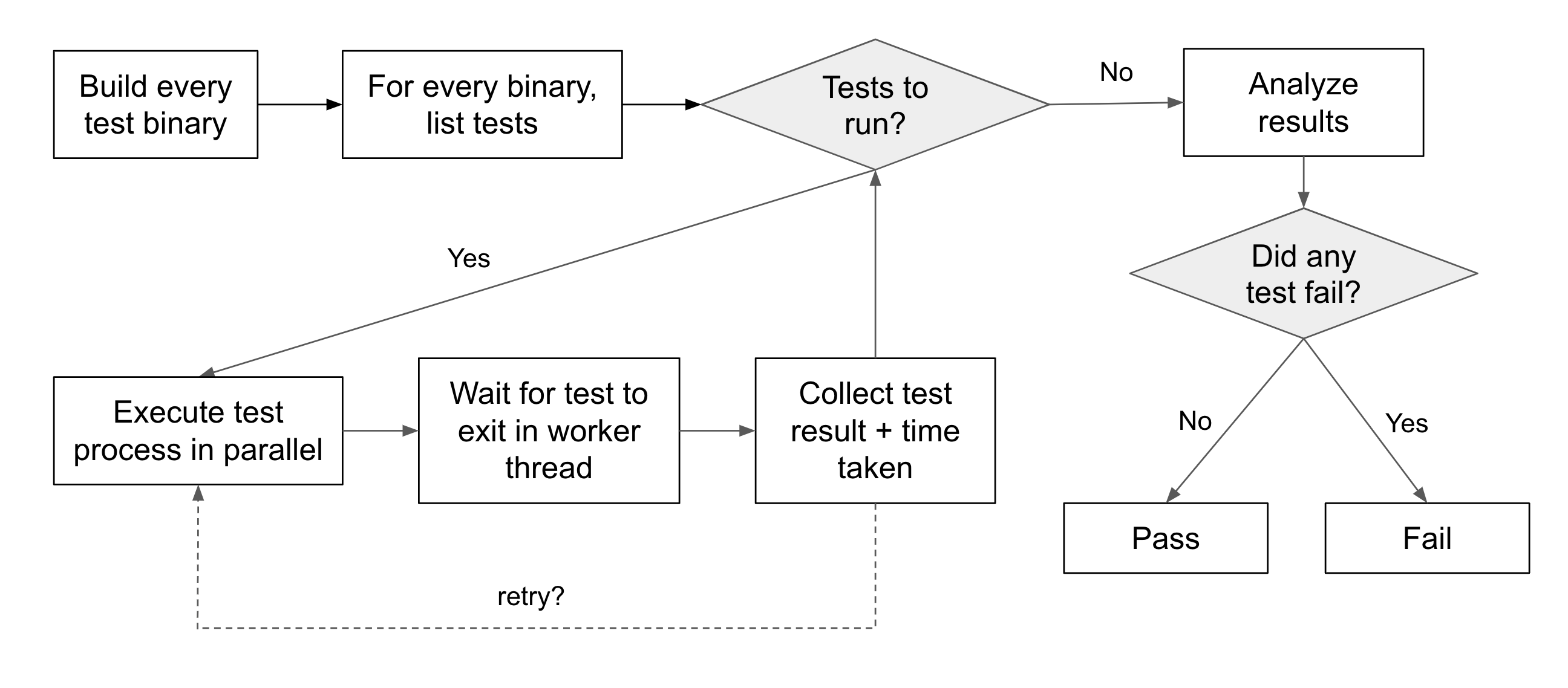

- Up to 3× as fast as cargo test. Nextest uses a state-of-the-art execution model for faster, more reliable test runs.

- Identify slow and leaky tests. Use nextest to detect misbehaving tests, identify bottlenecks during test execution, and optionally terminate tests if they take too long.

- Filter tests using an embedded language. Use powerful filter expressions to specify granular subsets of tests on the command-line, and to enable per-test overrides.

- Configure per-test overrides. Automatically retry subsets of tests, mark them as heavy, or run them serially.

- Designed for CI. Nextest addresses real-world pain points in continuous integration scenarios:

  - Use pre-built binaries for quick installation.
  - Set up CI-specific configuration profiles.
  - Reuse builds and partition test runs across multiple CI jobs. (Check out this example on GitHub Actions).
  - Automatically retry failing tests, and mark them as flaky if they pass later.
  - Print failing output at the end of test runs.
  - Output information about test runs as JUnit XML.

- Cross-platform. Nextest works on Linux and other Unixes, Mac and Windows, so you get the benefits of faster test runs no matter what platform you use.

- ... and more coming soon!

Quick start

Install cargo-nextest for your platform using the pre-built binaries.

Run all tests in a workspace:

cargo nextest run

For more detailed installation instructions, see Installation.

Note: Doctests are currently not supported because of limitations in stable Rust. For now, run doctests in a separate step with cargo test --doc.

Crates in this project

| Crate | Description |
|---|---|
| cargo-nextest | the main test binary |
| nextest-runner | core nextest logic |
| nextest-metadata | parsers for machine-readable output |
| nextest-filtering | parser and evaluator for filter expressions |
| quick-junit | JUnit XML serializer |
| datatest-stable | custom test harness for data-driven tests |
| future-queue | run queued futures with global and group limits |

Contributing

The source code for nextest and this site are hosted on GitHub, at https://github.com/nextest-rs/nextest.

Contributions are welcome! Please see the CONTRIBUTING file for how to help out.

License

The source code for nextest is licensed under the MIT and Apache 2.0 licenses.

This document is licensed under CC BY 4.0. This means that you are welcome to share, adapt or modify this material as long as you give appropriate credit.

Installation and usage

cargo-nextest works on Linux and other Unix-like OSes, macOS, and Windows.

Installing pre-built binaries (recommended)

cargo-nextest is available as pre-built binaries. See Pre-built binaries for more information.

Installing from source

If pre-built binaries are not available for your platform, or you'd like to otherwise install cargo-nextest from source, see Installing from source for more information.

Windows antivirus and macOS Gatekeeper

For notes about platform-specific performance issues caused by anti-malware software on Windows and macOS, see Windows antivirus and macOS Gatekeeper.

Installing pre-built binaries

The quickest way to get going with nextest is to download a pre-built binary for your platform. The latest nextest release is available at:

- get.nexte.st/latest/linux for Linux x86_64, including Windows Subsystem for Linux (WSL)

- get.nexte.st/latest/linux-arm for Linux aarch64

- get.nexte.st/latest/mac for macOS, both x86_64 and Apple Silicon

- get.nexte.st/latest/windows for Windows x86_64

Other platforms

Nextest's CI doesn't run on these platforms, so these binaries will most likely work but aren't guaranteed to.

- get.nexte.st/latest/linux-musl for Linux x86_64, with musl libc

- get.nexte.st/latest/windows-x86 for Windows i686

- get.nexte.st/latest/freebsd for FreeBSD x86_64

- get.nexte.st/latest/illumos for illumos x86_64

These archives contain a single binary called cargo-nextest (cargo-nextest.exe on Windows). Add this binary to a location on your PATH.

The standard Linux binaries target glibc and have a minimum requirement of glibc 2.27 (Ubuntu 18.04).

Rust targeting Linux with musl currently has a bug that Rust targeting Linux with glibc doesn't have. This bug means that nextest's linux-musl binary has slower test runs and is susceptible to signal-related races. Only use the linux-musl binary if the standard Linux binary doesn't work in your environment.

Downloading and installing from your terminal

The instructions below are suitable for both end users and CI. These links will stay stable.

Note: The instructions below assume that your Rust installation is managed via rustup. You can extract the archive to a different directory in your PATH if required.

If you'd like to stay on the 0.9 series to avoid breaking changes (see the stability policy for more), replace latest in the URL with 0.9.

Linux x86_64

curl -LsSf https://get.nexte.st/latest/linux | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin

Linux aarch64

curl -LsSf https://get.nexte.st/latest/linux-arm | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin

macOS (x86_64 and Apple Silicon)

curl -LsSf https://get.nexte.st/latest/mac | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin

This will download a universal binary that works on both Intel and Apple Silicon Macs.

Windows x86_64 using PowerShell

Run this in PowerShell:

$tmp = New-TemporaryFile | Rename-Item -NewName { $_ -replace 'tmp$', 'zip' } -PassThru
Invoke-WebRequest -OutFile $tmp https://get.nexte.st/latest/windows
$outputDir = if ($Env:CARGO_HOME) { Join-Path $Env:CARGO_HOME "bin" } else { "~/.cargo/bin" }
$tmp | Expand-Archive -DestinationPath $outputDir -Force
$tmp | Remove-Item

Windows x86_64 using Unix tools

If you have access to a Unix shell, curl and tar natively on Windows (for example if you're using shell: bash on GitHub Actions):

curl -LsSf https://get.nexte.st/latest/windows-tar | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin

Note: Windows Subsystem for Linux (WSL) users should follow the Linux x86_64 instructions.

If you're a Windows expert who can come up with a better way to do this, please add a suggestion to this issue!

Other platforms

FreeBSD x86_64

curl -LsSf https://get.nexte.st/latest/freebsd | tar zxf - -C ${CARGO_HOME:-~/.cargo}/bin

illumos x86_64

curl -LsSf https://get.nexte.st/latest/illumos | gunzip | tar xf - -C ${CARGO_HOME:-~/.cargo}/bin

As of 2022-12, the current version of illumos tar has a bug where tar zxf doesn't work over standard input.

Using cargo-binstall

If you have cargo-binstall available, you can install nextest with:

cargo binstall cargo-nextest --secure

Community-maintained binaries

These binaries are maintained by the community—thank you!

Homebrew

To install nextest with Homebrew, on macOS or Linux:

brew install cargo-nextest

Arch Linux

On Arch Linux, install nextest with pacman by running:

pacman -S cargo-nextest

Using pre-built binaries in CI

Pre-built binaries can be used in continuous integration to speed up test runs.

Using nextest in GitHub Actions

The easiest way to install nextest in GitHub Actions is to use the Install Development Tools action maintained by Taiki Endo.

To install the latest version of nextest, add this to your job after installing Rust and Cargo:

- uses: taiki-e/install-action@nextest

See this in practice with nextest's own CI.

The action will download pre-built binaries from the URL above and add them to .cargo/bin.

To install a version series or specific version, use this instead:

- uses: taiki-e/install-action@v2
  with:
    tool: nextest
    ## version (defaults to "latest") can be a series like 0.9:
    # tool: nextest@0.9
    ## version can also be a specific version like 0.9.11:
    # tool: nextest@0.9.11

Tip: GitHub Actions supports ANSI color codes. To get color support for nextest (and Cargo), add this to your workflow:

env:
  CARGO_TERM_COLOR: always

For a full list of environment variables supported by nextest, see Environment variables.

Other CI systems

Install pre-built binaries on other CI systems by downloading and extracting the respective archives, using the commands above as a guide. See Release URLs for more about how to specify nextest versions and platforms.

If you've made it easy to install nextest on another CI system, feel free to submit a pull request with documentation.

Release URLs

Binary releases of cargo-nextest will always be available at https://get.nexte.st/{version}/{platform}.

{version} identifier

The {version} identifier is:

- latest for the latest release (not including pre-releases)
- a version range, for example 0.9, for the latest release in the 0.9 series (not including pre-releases)
- the exact version number, for example 0.9.4, for that specific version
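To make the three forms concrete, here is a minimal Rust sketch of how a {version} identifier could be matched against a list of published versions. It is not the actual resolver behind get.nexte.st: `parse_release` and `resolve` are hypothetical names, and the sketch assumes that pre-releases are exactly the version strings containing a `-`.

```rust
/// Parses a stable release like "0.9.11" into (major, minor, patch).
/// Assumption: any version containing '-' (e.g. "0.9.12-rc.1") is a
/// pre-release, so it is never picked by "latest" or by a series.
fn parse_release(v: &str) -> Option<(u64, u64, u64)> {
    if v.contains('-') {
        return None;
    }
    let mut parts = v.split('.');
    let major: u64 = parts.next()?.parse().ok()?;
    let minor: u64 = parts.next()?.parse().ok()?;
    let patch: u64 = parts.next()?.parse().ok()?;
    if parts.next().is_some() {
        return None; // more than three components
    }
    Some((major, minor, patch))
}

/// Hypothetical resolver for a {version} identifier.
fn resolve<'a>(identifier: &str, published: &[&'a str]) -> Option<&'a str> {
    // An exact version number selects that specific version.
    if let Some(exact) = published.iter().copied().find(|v| *v == identifier) {
        return Some(exact);
    }
    // Only stable releases are candidates for "latest" or a series.
    let releases = published
        .iter()
        .copied()
        .filter_map(|v| parse_release(v).map(|parsed| (parsed, v)));
    let candidates: Vec<((u64, u64, u64), &'a str)> = if identifier == "latest" {
        releases.collect()
    } else {
        // A series like "0.9" selects the newest 0.9.x release.
        let (major, minor) = identifier.split_once('.')?;
        let major: u64 = major.parse().ok()?;
        let minor: u64 = minor.parse().ok()?;
        releases
            .filter(|((ma, mi, _), _)| *ma == major && *mi == minor)
            .collect()
    };
    // Pick the highest (major, minor, patch) among the candidates.
    candidates.into_iter().max_by_key(|(parsed, _)| *parsed).map(|(_, v)| v)
}
```

With published versions 0.9.4, 0.9.11, 0.9.12-rc.1 and 0.8.2, both latest and 0.9 resolve to 0.9.11 in this sketch, because the release candidate is skipped.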

{platform} identifier

The {platform} identifier is:

- x86_64-unknown-linux-gnu.tar.gz for x86_64 Linux (tar.gz)
- x86_64-unknown-linux-musl.tar.gz for x86_64 Linux with musl (tar.gz, available for version 0.9.29+)
- aarch64-unknown-linux-gnu.tar.gz for aarch64 Linux (tar.gz, available for version 0.9.29+)
- universal-apple-darwin.tar.gz for x86_64 and arm64 macOS (tar.gz)
- x86_64-pc-windows-msvc.zip for x86_64 Windows (zip)
- x86_64-pc-windows-msvc.tar.gz for x86_64 Windows (tar.gz)
- i686-pc-windows-msvc.zip for i686 Windows (zip)
- i686-pc-windows-msvc.tar.gz for i686 Windows (tar.gz)
- x86_64-unknown-freebsd.tar.gz for x86_64 FreeBSD (tar.gz)
- x86_64-unknown-illumos.tar.gz for x86_64 illumos (tar.gz)

For convenience, the following shortcuts are defined:

- linux points to x86_64-unknown-linux-gnu.tar.gz
- linux-musl points to x86_64-unknown-linux-musl.tar.gz
- linux-arm points to aarch64-unknown-linux-gnu.tar.gz
- mac points to universal-apple-darwin.tar.gz
- windows points to x86_64-pc-windows-msvc.zip
- windows-tar points to x86_64-pc-windows-msvc.tar.gz
- windows-x86 points to i686-pc-windows-msvc.zip
- windows-x86-tar points to i686-pc-windows-msvc.tar.gz
- freebsd points to x86_64-unknown-freebsd.tar.gz
- illumos points to x86_64-unknown-illumos.tar.gz

Also, each release's canonical GitHub Releases URL is available at https://get.nexte.st/{version}/release. For example, the latest GitHub release is available at get.nexte.st/latest/release.

Examples

The latest nextest release in the 0.9 series for macOS is available as a tar.gz file at get.nexte.st/0.9/mac.

Nextest version 0.9.11 for Windows is available as a zip file at get.nexte.st/0.9.11/windows, and as a tar.gz file at get.nexte.st/0.9.11/windows-tar.
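The URL scheme and the shortcut list above can be expressed as a small lookup, which may help when scripting downloads. This Rust sketch is illustrative only: `SHORTCUTS`, `expand_shortcut` and `release_url` are hypothetical names. The table simply mirrors the documented shortcuts and makes no claim about what else the server accepts.

```rust
/// Shortcut names and the platform identifiers they point to,
/// copied from the documented list.
const SHORTCUTS: &[(&str, &str)] = &[
    ("linux", "x86_64-unknown-linux-gnu.tar.gz"),
    ("linux-musl", "x86_64-unknown-linux-musl.tar.gz"),
    ("linux-arm", "aarch64-unknown-linux-gnu.tar.gz"),
    ("mac", "universal-apple-darwin.tar.gz"),
    ("windows", "x86_64-pc-windows-msvc.zip"),
    ("windows-tar", "x86_64-pc-windows-msvc.tar.gz"),
    ("windows-x86", "i686-pc-windows-msvc.zip"),
    ("windows-x86-tar", "i686-pc-windows-msvc.tar.gz"),
    ("freebsd", "x86_64-unknown-freebsd.tar.gz"),
    ("illumos", "x86_64-unknown-illumos.tar.gz"),
];

/// Maps a shortcut to its full platform identifier; anything that is
/// not a known shortcut is returned unchanged.
fn expand_shortcut(platform: &str) -> &str {
    SHORTCUTS
        .iter()
        .find(|(shortcut, _)| *shortcut == platform)
        .map(|(_, full)| *full)
        .unwrap_or(platform)
}

/// Builds https://get.nexte.st/{version}/{platform}.
fn release_url(version: &str, platform: &str) -> String {
    format!("https://get.nexte.st/{version}/{platform}")
}
```

For example, release_url("0.9.11", "windows-tar") produces the tar.gz URL from the examples above, and expand_shortcut("windows-tar") yields x86_64-pc-windows-msvc.tar.gz.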

Installing from source

If pre-built binaries are not available for your platform, or you'd otherwise like to install cargo-nextest from source, here's what you need to do:

Installing from crates.io

Run the following command:

cargo install cargo-nextest --locked

Note: A plain cargo install cargo-nextest without --locked is not supported. If you run into build issues, please try with --locked before reporting an issue.

cargo nextest must be compiled and installed with Rust 1.74 or later (see Stability policy for more), but it can build and run tests against any version of Rust.

Using a cached install in CI

Most CI users of nextest will benefit from using cached binaries. Consider using the pre-built binaries for this purpose.

See this example for how the nextest repository uses pre-built binaries.

If your CI is based on GitHub Actions, you may use the baptiste0928/cargo-install action to build cargo-nextest from source and cache the cargo-nextest binary.

jobs:
  ci:
    # ...
    steps:
      - uses: actions/checkout@v3
      # Install a Rust toolchain here.
      - name: Install cargo-nextest
        uses: baptiste0928/cargo-install@v1
        with:
          crate: cargo-nextest
          locked: true
          # Uncomment the following line if you'd like to stay on the 0.9 series
          # version: 0.9
      # At this point, cargo-nextest will be available on your PATH

Also consider using the Swatinem/rust-cache action to make your builds faster.

Installing from GitHub

Install the latest, in-development version of cargo-nextest from the GitHub repository:

cargo install --git https://github.com/nextest-rs/nextest --bin cargo-nextest

Updating nextest

Starting with version 0.9.19, cargo-nextest has built-in update functionality. Run cargo nextest self update to check for and perform updates.

The nextest updater downloads and installs the latest version of the cargo-nextest binary from get.nexte.st.

To request a specific version, run (e.g.) cargo nextest self update --version 0.9.19.

For older versions

If you're on cargo-nextest 0.9.18 or below, update by redownloading and reinstalling the binary following the instructions at Pre-built binaries.

For distributors

cargo-nextest 0.9.21 and above has a default-no-update feature, which contains all default features except for self-update. The recommended, forward-compatible way to build cargo-nextest is with --no-default-features --features default-no-update.

Windows antivirus and macOS Gatekeeper

This page covers common performance issues caused by anti-malware protections on Windows and macOS. These performance issues are not unique to nextest, but its execution model may exacerbate them.

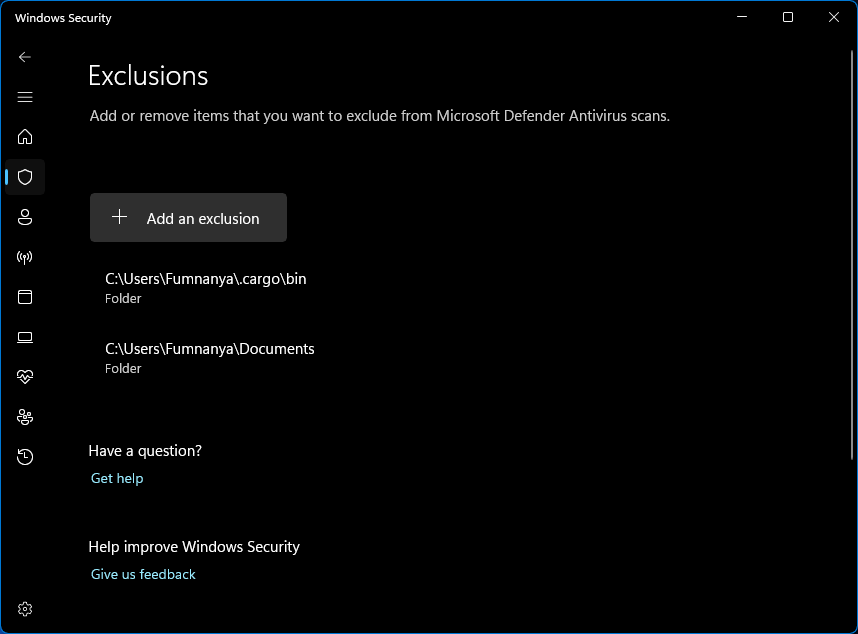

Windows

Your antivirus software—typically Windows Security, also known as Microsoft Defender—might interfere with process execution, making your test runs significantly slower. For optimal performance, exclude the following directories from checks:

- The directory with all your code in it

- Your .cargo\bin directory, typically within your home directory (see this Rust issue).

Here's how to exclude directories from Windows Security.

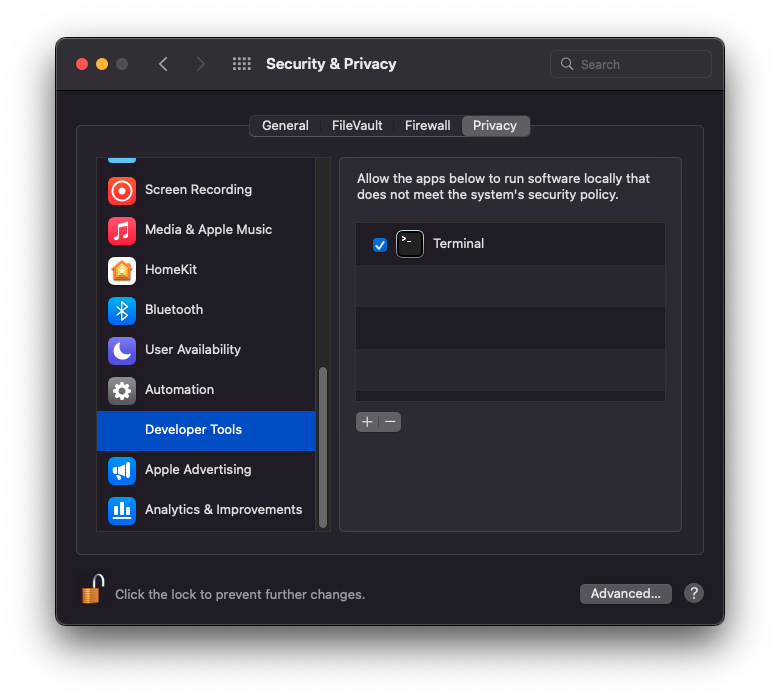

macOS

Similar to Windows Security, macOS has a system called Gatekeeper which performs checks on binaries. Gatekeeper can cause nextest runs to be significantly slower. A typical sign of this happening is even the simplest of tests in cargo nextest run taking more than 0.2 seconds.

Adding your terminal to Developer Tools will cause any processes run by it to be excluded from Gatekeeper. For optimal performance, add your terminal to Developer Tools. You may also need to run cargo clean afterwards.

How to add your terminal to Developer Tools

- Run sudo spctl developer-mode enable-terminal in your terminal.

- Go to System Preferences, and then to Security & Privacy.

- Under the Privacy tab, an item called Developer Tools should be present. Navigate to it.

- Ensure that your terminal is listed and enabled. If you're using a third-party terminal like iTerm, be sure to add it to the list (You may have to click the lock in the bottom-left corner and authenticate).

- Restart your terminal.

See this comment on Hacker News for more.

There are still some reports of performance issues on macOS after Developer Tools have been enabled. If you're seeing this, please add a note to this issue!

Usage

This section covers usage, features and options for cargo-nextest.

Basic usage

To build and run all tests in a workspace, cd into the workspace and run:

cargo nextest run

For more information about running tests, see Running tests.

Limitations

-

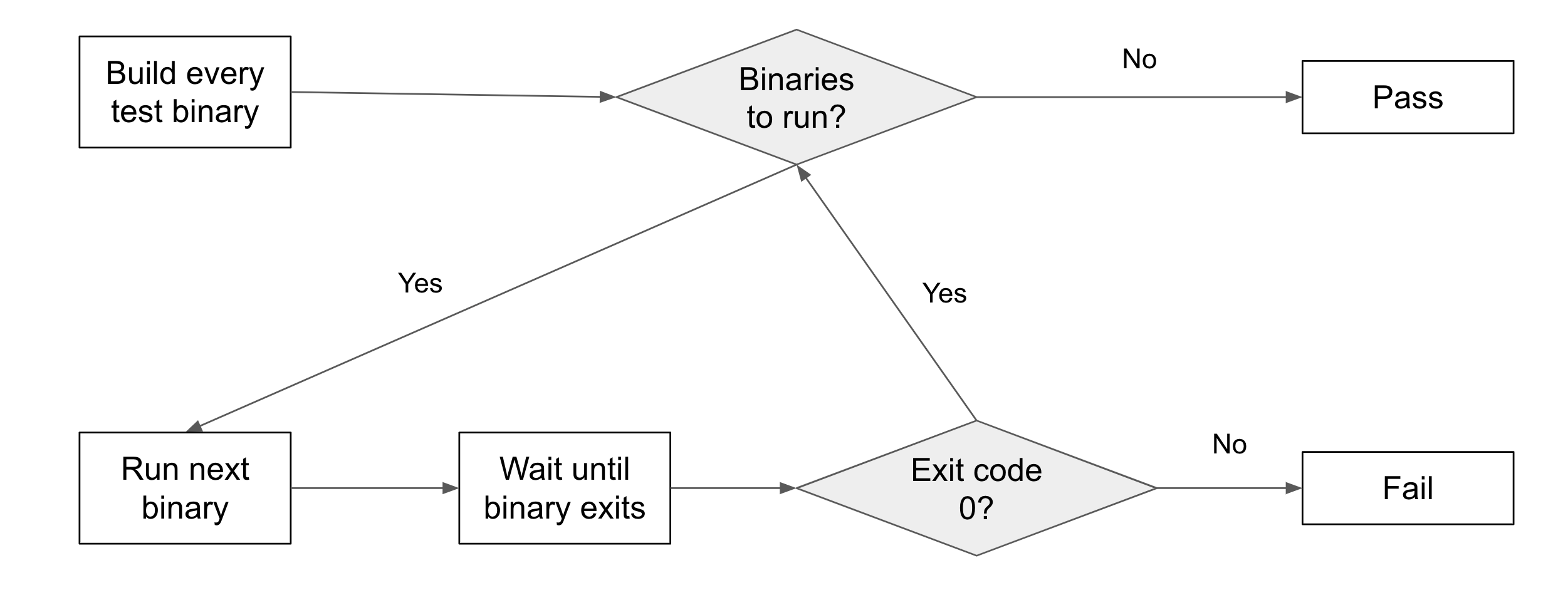

The nextest execution model means that each individual test is executed as a separate process. Tests that depend on being executed within the same process may not work correctly.

To work around this, consider combining those tests into one so that nextest runs them as a unit, or excluding those tests from nextest.

-

There's no way to mark a particular test binary as excluded from nextest.

-

Doctests are currently not supported because of limitations in stable Rust. Locally and in CI, after

cargo nextest run, usecargo test --docto run all doctests.

Running tests

To build and run all tests in a workspace, cd into the workspace and run:

cargo nextest run

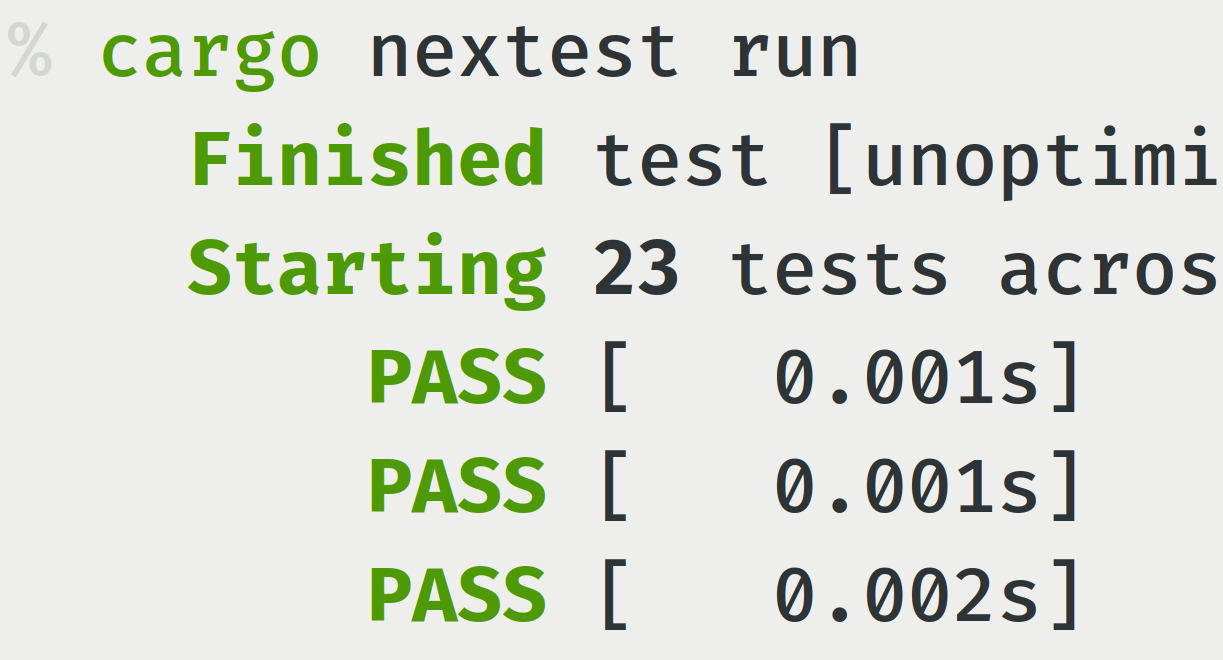

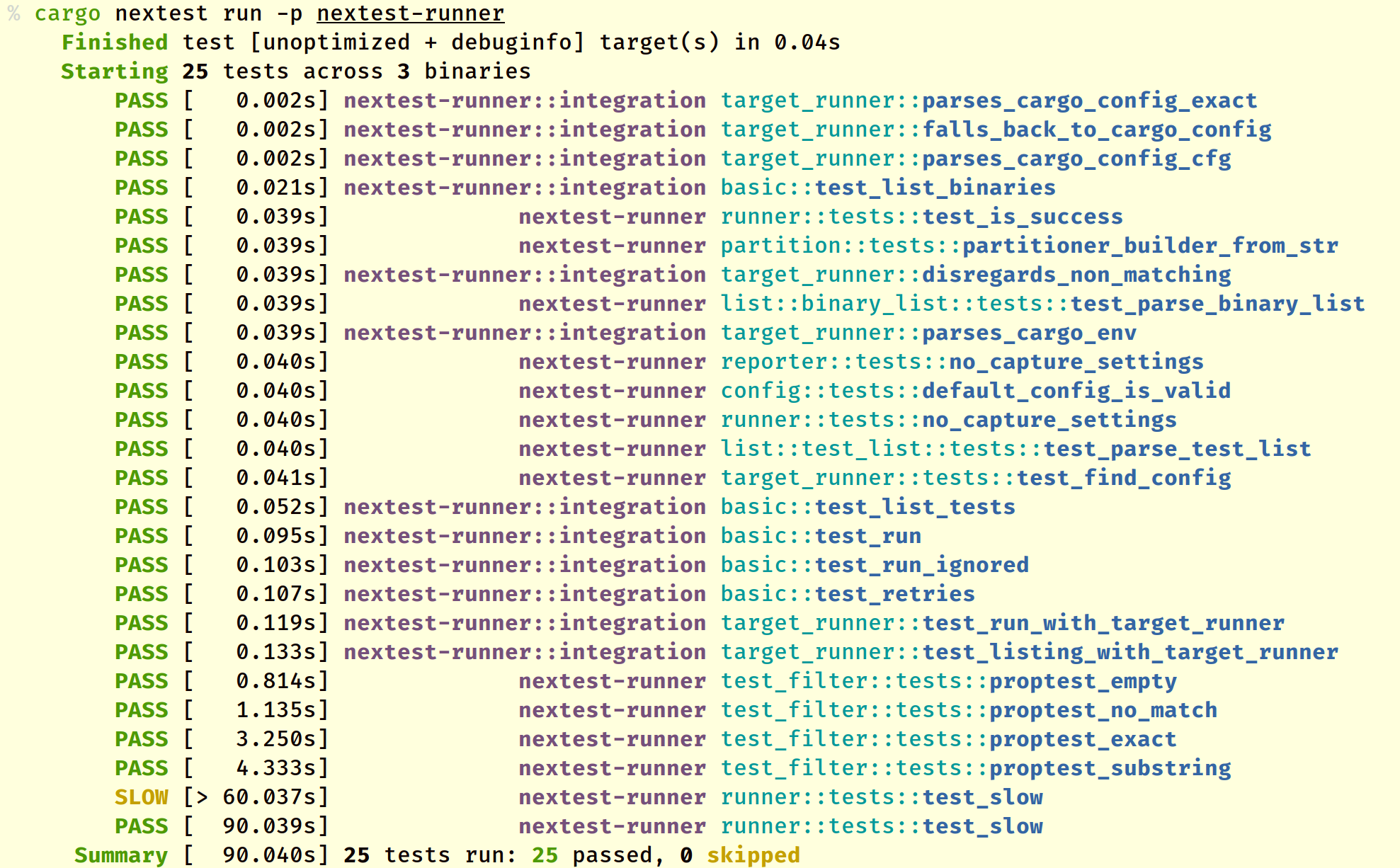

This will produce output that looks like:

In the output above:

- Tests are marked PASS or FAIL, and the amount of wall-clock time each test takes is listed within square brackets. In the example above, test_list_tests passed and took 0.052 seconds to execute.

- Tests that take more than a specified amount of time are marked SLOW. The timeout is 60 seconds by default, and can be changed through configuration.

- The part of the test in purple is the binary ID for a unit test binary (see Binary IDs below).

- The part after the binary ID is the test name, including the module the test is in. The final part of the test name is highlighted in bold blue text.

cargo nextest run supports all the options that cargo test does. For example, to only execute tests for a package called my-package:

cargo nextest run -p my-package

For a full list of options accepted by cargo nextest run, see cargo nextest run --help.

Binary IDs

A test binary can be any of:

- A unit test binary built from tests within lib.rs or its submodules. Nextest shows the binary ID for these as just the crate name, without a :: separator inside it.

- An integration test binary built from tests in the [[test]] section of Cargo.toml (typically tests in the tests directory). The binary ID for these has the format crate-name::bin-name.

- Some other kind of test binary, such as a benchmark. In this case, the binary ID is crate-name::kind/bin-name. For example, nextest-runner::bench/my-bench or quick-junit::example/show-junit.
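The three binary ID shapes can be summarized as a single formatting function. This Rust sketch is a hypothetical model for illustration: the `TestBinary` enum and `binary_id` function are not part of nextest-metadata or any other nextest crate.

```rust
/// Hypothetical model of the three kinds of test binary described above.
enum TestBinary<'a> {
    /// Unit tests built from lib.rs and its submodules.
    Unit { crate_name: &'a str },
    /// An integration test from a [[test]] section of Cargo.toml.
    Integration { crate_name: &'a str, bin_name: &'a str },
    /// Any other kind of test binary, such as a benchmark or example.
    Other { crate_name: &'a str, kind: &'a str, bin_name: &'a str },
}

/// Formats the binary ID the way the list above describes it.
fn binary_id(binary: &TestBinary) -> String {
    match binary {
        // Just the crate name, with no "::" separator.
        TestBinary::Unit { crate_name } => crate_name.to_string(),
        // crate-name::bin-name
        TestBinary::Integration { crate_name, bin_name } => format!("{crate_name}::{bin_name}"),
        // crate-name::kind/bin-name
        TestBinary::Other { crate_name, kind, bin_name } => {
            format!("{crate_name}::{kind}/{bin_name}")
        }
    }
}
```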

For more about unit and integration tests, see the documentation for cargo test.

Filtering tests

To only run tests that match certain names:

cargo nextest run <test-name1> <test-name2>...

--skip and --exact

Nextest does not support --skip and --exact directly; instead, it supports more powerful filter expressions which supersede these options.

Here are some examples:

| Cargo test command | Nextest command |
|---|---|
| cargo test -- --skip skip1 --skip skip2 test3 | cargo nextest run -E 'test(test3) - test(/skip[12]/)' |
| cargo test -- --exact test1 test2 | cargo nextest run -E 'test(=test1) + test(=test2)' |
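If you are migrating scripts that pass --exact or --skip, the translations in the table can be generated mechanically. This is a hypothetical Rust helper, not part of nextest. It assumes, as the table implies, that test(name) matches test names containing name and test(=name) matches exactly. It also assumes names need no escaping inside test(...), and it groups multiple skipped names with parentheses and + instead of the regex form shown in the table.

```rust
/// Equivalent of `cargo test -- --exact <names>...`: each name becomes
/// `test(=name)`, and the sets are joined with the union operator `+`.
fn exact_filter(names: &[&str]) -> String {
    names
        .iter()
        .map(|name| format!("test(={name})"))
        .collect::<Vec<_>>()
        .join(" + ")
}

/// Equivalent of `cargo test -- --skip <skips>... <filter>`: tests whose
/// names contain `filter`, minus (`-`) the union of the skipped names.
fn skip_filter(filter: &str, skips: &[&str]) -> String {
    let base = format!("test({filter})");
    if skips.is_empty() {
        return base;
    }
    let skipped: Vec<String> = skips.iter().map(|s| format!("test({s})")).collect();
    format!("{} - ({})", base, skipped.join(" + "))
}
```

The result is meant to be passed to cargo nextest run -E, quoted for your shell.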

Filtering by build platform

While cross-compiling code, some tests (e.g. proc-macro tests) may need to be run on the host platform. To filter tests based on the build platform they're for, nextest's filter expressions accept the platform() set with values target and host.

For example, to only run tests for the host platform:

cargo nextest run -E 'platform(host)'

Displaying live test output

By default, cargo nextest run will capture test output and only display it on failure. If you do not want to capture test output:

cargo nextest run --no-capture

In this mode, cargo-nextest will run tests serially so that output from different tests isn't interspersed. This is different from cargo test -- --nocapture, which will run tests in parallel.

Doctests are currently not supported because of limitations in stable Rust. For now, run doctests in a separate step with cargo test --doc.

Options and arguments

Build and run tests

Usage: cargo nextest run [OPTIONS] [FILTERS]... [-- <TEST-BINARY-ARGS>...]

Arguments:

[FILTERS]... Test name filter

[TEST-BINARY-ARGS]... Emulated cargo test binary arguments (partially supported)

Options:

-P, --profile <PROFILE> Nextest profile to use [env: NEXTEST_PROFILE=]

-v, --verbose Verbose output [env: NEXTEST_VERBOSE=]

--color <WHEN> Produce color output: auto, always, never [env: CARGO_TERM_COLOR=] [default: auto]

-h, --help Print help (see more with '--help')

Package selection:

-p, --package <PACKAGES> Package to test

--workspace Test all packages in the workspace

--exclude <EXCLUDE> Exclude packages from the test

--all Alias for --workspace (deprecated)

Target selection:

--lib Test only this package's library unit tests

--bin <BIN> Test only the specified binary

--bins Test all binaries

--example <EXAMPLE> Test only the specified example

--examples Test all examples

--test <TEST> Test only the specified test target

--tests Test all targets

--bench <BENCH> Test only the specified bench target

--benches Test all benches

--all-targets Test all targets

Feature selection:

-F, --features <FEATURES> Space or comma separated list of features to activate

--all-features Activate all available features

--no-default-features Do not activate the `default` feature

Compilation options:

--build-jobs <N> Number of build jobs to run

-r, --release Build artifacts in release mode, with optimizations

--cargo-profile <NAME> Build artifacts with the specified Cargo profile

--target <TRIPLE> Build for the target triple

--target-dir <DIR> Directory for all generated artifacts

--unit-graph Output build graph in JSON (unstable)

--timings[=<FMTS>] Timing output formats (unstable) (comma separated): html, json

Manifest options:

--manifest-path <PATH> Path to Cargo.toml

--frozen Require Cargo.lock and cache are up to date

--locked Require Cargo.lock is up to date

--offline Run without accessing the network

Other Cargo options:

--cargo-quiet Do not print cargo log messages

--cargo-verbose... Use cargo verbose output (specify twice for very verbose/build.rs output)

--ignore-rust-version Ignore `rust-version` specification in packages

--future-incompat-report Outputs a future incompatibility report at the end of the build

--config <KEY=VALUE> Override a configuration value

-Z <FLAG> Unstable (nightly-only) flags to Cargo, see 'cargo -Z help' for details

Filter options:

--run-ignored <WHICH> Run ignored tests [possible values: default, ignored-only, all]

--partition <PARTITION> Test partition, e.g. hash:1/2 or count:2/3

-E, --filter-expr <EXPR> Test filter expression (see <https://nexte.st/book/filter-expressions>)

Runner options:

--no-run Compile, but don't run tests

-j, --test-threads <N> Number of tests to run simultaneously [possible values: integer or "num-cpus"] [default: from profile] [env: NEXTEST_TEST_THREADS=] [aliases: jobs]

--retries <N> Number of retries for failing tests [default: from profile] [env: NEXTEST_RETRIES=]

--fail-fast Cancel test run on the first failure

--no-fail-fast Run all tests regardless of failure

--no-capture Run tests serially and do not capture output

Reporter options:

--failure-output <WHEN> Output stdout and stderr on failure [env: NEXTEST_FAILURE_OUTPUT=] [possible values: immediate, immediate-final, final, never]

--success-output <WHEN> Output stdout and stderr on success [env: NEXTEST_SUCCESS_OUTPUT=] [possible values: immediate, immediate-final, final, never]

--status-level <LEVEL> Test statuses to output [env: NEXTEST_STATUS_LEVEL=] [possible values: none, fail, retry, slow, leak, pass, skip, all]

--final-status-level <LEVEL> Test statuses to output at the end of the run [env: NEXTEST_FINAL_STATUS_LEVEL=] [possible values: none, fail, flaky, slow, skip, pass, all]

--hide-progress-bar Do not display the progress bar [env: NEXTEST_HIDE_PROGRESS_BAR=]

Reuse build options:

--archive-file <PATH> Path to nextest archive

--archive-format <FORMAT> Archive format [default: auto] [possible values: auto, tar-zst]

--extract-to <DIR> Destination directory to extract archive to [default: temporary directory]

--extract-overwrite Overwrite files in destination directory while extracting archive

--persist-extract-tempdir Persist extracted temporary directory

--cargo-metadata <PATH> Path to cargo metadata JSON

--workspace-remap <PATH> Remapping for the workspace root

--binaries-metadata <PATH> Path to binaries-metadata JSON

--target-dir-remap <PATH> Remapping for the target directory

Config options:

--config-file <PATH>

Config file [default: workspace-root/.config/nextest.toml]

--tool-config-file <TOOL:ABS_PATH>

Tool-specific config files

--override-version-check

Override checks for the minimum version defined in nextest's config

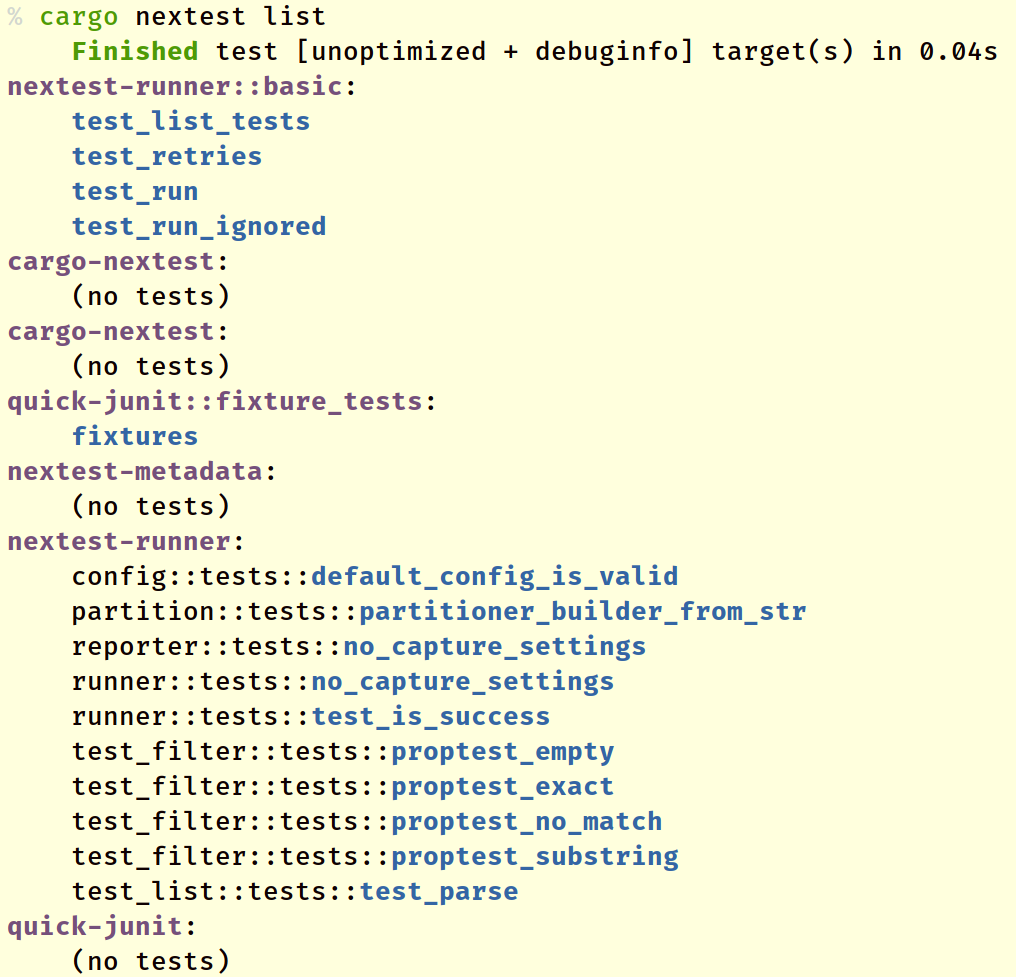

Listing tests

To build and list all tests in a workspace, cd into the workspace and run:

cargo nextest list

cargo nextest list takes most of the same options that cargo nextest run takes. For a full list of options accepted, see cargo nextest list --help.

Doctests are currently not supported because of limitations in stable Rust. For now, run doctests in a separate step with cargo test --doc.

Options and arguments

List tests in workspace

Usage: cargo nextest list [OPTIONS] [FILTERS]... [-- <TEST-BINARY-ARGS>...]

Arguments:

[FILTERS]... Test name filter

[TEST-BINARY-ARGS]... Emulated cargo test binary arguments (partially supported)

Options:

-v, --verbose Verbose output [env: NEXTEST_VERBOSE=]

--color <WHEN> Produce color output: auto, always, never [env: CARGO_TERM_COLOR=] [default: auto]

-h, --help Print help (see more with '--help')

Package selection:

-p, --package <PACKAGES> Package to test

--workspace Test all packages in the workspace

--exclude <EXCLUDE> Exclude packages from the test

--all Alias for --workspace (deprecated)

Target selection:

--lib Test only this package's library unit tests

--bin <BIN> Test only the specified binary

--bins Test all binaries

--example <EXAMPLE> Test only the specified example

--examples Test all examples

--test <TEST> Test only the specified test target

--tests Test all targets

--bench <BENCH> Test only the specified bench target

--benches Test all benches

--all-targets Test all targets

Feature selection:

-F, --features <FEATURES> Space or comma separated list of features to activate

--all-features Activate all available features

--no-default-features Do not activate the `default` feature

Compilation options:

--build-jobs <N> Number of build jobs to run

-r, --release Build artifacts in release mode, with optimizations

--cargo-profile <NAME> Build artifacts with the specified Cargo profile

--target <TRIPLE> Build for the target triple

--target-dir <DIR> Directory for all generated artifacts

--unit-graph Output build graph in JSON (unstable)

--timings[=<FMTS>] Timing output formats (unstable) (comma separated): html, json

Manifest options:

--manifest-path <PATH> Path to Cargo.toml

--frozen Require Cargo.lock and cache are up to date

--locked Require Cargo.lock is up to date

--offline Run without accessing the network

Other Cargo options:

--cargo-quiet Do not print cargo log messages

--cargo-verbose... Use cargo verbose output (specify twice for very verbose/build.rs output)

--ignore-rust-version Ignore `rust-version` specification in packages

--future-incompat-report Outputs a future incompatibility report at the end of the build

--config <KEY=VALUE> Override a configuration value

-Z <FLAG> Unstable (nightly-only) flags to Cargo, see 'cargo -Z help' for details

Filter options:

--run-ignored <WHICH> Run ignored tests [possible values: default, ignored-only, all]

--partition <PARTITION> Test partition, e.g. hash:1/2 or count:2/3

-E, --filter-expr <EXPR> Test filter expression (see <https://nexte.st/book/filter-expressions>)

Output options:

-T, --message-format <FMT> Output format [default: human] [possible values: human, json, json-pretty]

--list-type <TYPE> Type of listing [default: full] [possible values: full, binaries-only]

Reuse build options:

--archive-file <PATH> Path to nextest archive

--archive-format <FORMAT> Archive format [default: auto] [possible values: auto, tar-zst]

--extract-to <DIR> Destination directory to extract archive to [default: temporary directory]

--extract-overwrite Overwrite files in destination directory while extracting archive

--persist-extract-tempdir Persist extracted temporary directory

--cargo-metadata <PATH> Path to cargo metadata JSON

--workspace-remap <PATH> Remapping for the workspace root

--binaries-metadata <PATH> Path to binaries-metadata JSON

--target-dir-remap <PATH> Remapping for the target directory

Config options:

--config-file <PATH>

Config file [default: workspace-root/.config/nextest.toml]

--tool-config-file <TOOL:ABS_PATH>

Tool-specific config files

--override-version-check

Override checks for the minimum version defined in nextest's config

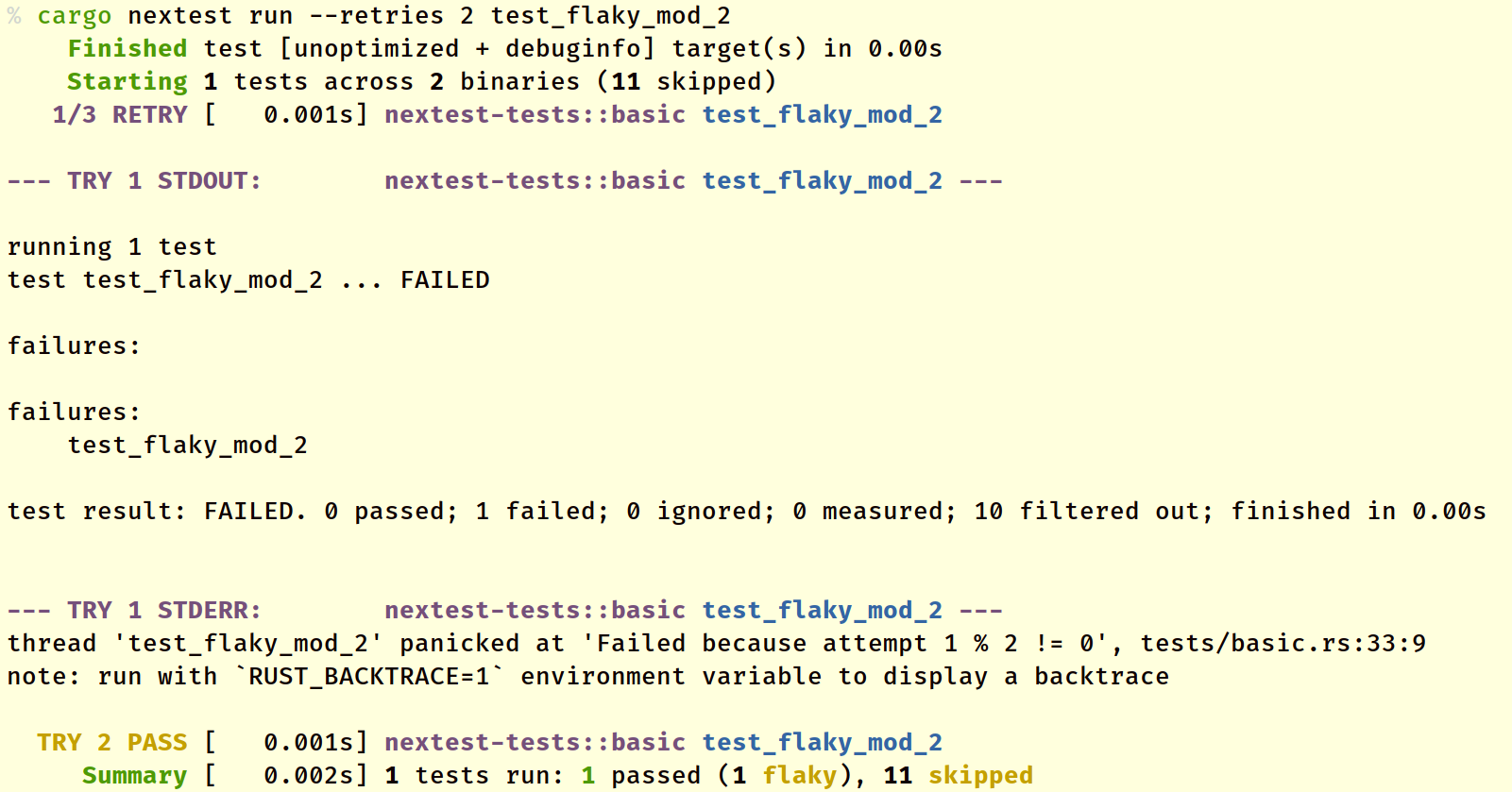

Retries and flaky tests

Sometimes, tests fail nondeterministically, which can be quite annoying to developers locally and in CI. cargo-nextest supports retrying failed tests with the --retries option. If a test succeeds during a retry, the test is marked flaky. Here's an example:

--retries 2 means that the test is retried twice, for a total of three attempts. In this case, the test fails on the first try but succeeds on the second try. The TRY 2 PASS text means that the test passed on the second try.

Flaky tests are treated as ultimately successful. If there are no other tests that failed, the exit code for the test run is 0.
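The relationship between --retries, the number of attempts, and the flaky status can be modeled in a few lines. This Rust sketch is a simplified model, not nextest's implementation: `Outcome`, `run_with_retries` and `run_succeeded` are hypothetical names, and each attempt is represented by a closure that returns whether the test passed.

```rust
#[derive(Debug, PartialEq)]
enum Outcome {
    /// Passed on the first attempt.
    Pass,
    /// Failed at least once, then passed on the given (1-based) attempt.
    Flaky { passed_on_attempt: u32 },
    /// Failed every attempt.
    Fail,
}

/// `retries` retries means `retries + 1` attempts in total.
fn run_with_retries(retries: u32, mut attempt: impl FnMut(u32) -> bool) -> Outcome {
    for n in 1..=retries + 1 {
        if attempt(n) {
            return if n == 1 {
                Outcome::Pass
            } else {
                Outcome::Flaky { passed_on_attempt: n }
            };
        }
    }
    Outcome::Fail
}

/// Flaky tests count as successes: the run succeeds (exit code 0)
/// unless some test failed on every attempt.
fn run_succeeded(outcomes: &[Outcome]) -> bool {
    !outcomes.contains(&Outcome::Fail)
}
```

A test that fails on try 1 and passes on try 2, as in the TRY 2 PASS example, comes out as Flaky { passed_on_attempt: 2 } with retries set to 2.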

Retries can also be:

- passed in via the environment variable NEXTEST_RETRIES.

- configured in .config/nextest.toml.

For the order that configuration parameters are resolved in, see Hierarchical configuration.

Delays and backoff

In some situations, you may wish to add delays between retries—for example, if your test hits a network service which is rate-limited.

In those cases, you can insert delays between test attempts with a backoff algorithm.

Note: Delays and backoff can only be specified through configuration. Passing in --retries via the command line or specifying the NEXTEST_RETRIES environment variable will override delays and backoff specified through configuration.

Fixed backoff

To insert a constant delay between test attempts, use the fixed backoff algorithm. For example, to retry tests up to twice with a 1 second delay between attempts, use:

[profile.default]

retries = { backoff = "fixed", count = 2, delay = "1s" }

Exponential backoff

Nextest also supports exponential backoff, where the delay between attempts doubles each time. For example, to retry tests up to 3 times with successive delays of 5 seconds, 10 seconds, and 20 seconds, use:

[profile.default]

retries = { backoff = "exponential", count = 3, delay = "5s" }

A maximum delay can also be specified to prevent delays from becoming too large. In the above example, if count = 5, the fourth and fifth retries would use delays of 40 seconds and 80 seconds, respectively. To clamp delays at 30 seconds, use:

[profile.default]

retries = { backoff = "exponential", count = 5, delay = "5s", max-delay = "30s" }

This effectively performs a truncated exponential backoff.
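The delay schedules described above (fixed, exponential, and exponential with max-delay) can be computed as follows. This is a hypothetical Rust model of the documented behavior before any jitter is applied. The `Backoff` enum and `retry_delays` function are illustrative names, not nextest's internals.

```rust
use std::time::Duration;

/// Hypothetical model of the two backoff settings.
enum Backoff {
    Fixed { delay: Duration },
    Exponential { delay: Duration, max_delay: Option<Duration> },
}

/// Delays before each of `count` retries (before jitter).
fn retry_delays(backoff: &Backoff, count: u32) -> Vec<Duration> {
    (0..count)
        .map(|i| match backoff {
            // Same delay before every retry.
            Backoff::Fixed { delay } => *delay,
            Backoff::Exponential { delay, max_delay } => {
                // delay, 2 * delay, 4 * delay, ... (doubling each time)
                let d = delay.saturating_mul(2u32.saturating_pow(i));
                // Truncate at max-delay if one is configured.
                match max_delay {
                    Some(max) => d.min(*max),
                    None => d,
                }
            }
        })
        .collect()
}
```

With delay = "5s" and count = 5, the unclamped schedule is 5, 10, 20, 40 and 80 seconds. Adding max-delay = "30s" turns it into 5, 10, 20, 30 and 30 seconds.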

Adding jitter

To avoid thundering herd problems, it can be useful to add randomness to delays. To do so, use jitter = true.

[profile.default]

retries = { backoff = "exponential", count = 3, delay = "1s", jitter = true }

jitter = true also works for fixed backoff.

The current jitter algorithm picks a value in between 0.5 * delay and delay uniformly at random. This is not part of the stable interface and is subject to change.
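The documented jitter rule maps a uniform random sample onto the range from half the delay up to the full delay. This Rust sketch shows only that mapping. `jittered` is a hypothetical name, and the `unit` parameter stands in for a random sample in [0, 1) that a real implementation would draw from an RNG. Because the algorithm is not part of the stable interface, treat this as illustrative.

```rust
use std::time::Duration;

/// Scales `delay` by a factor in [0.5, 1.0), chosen by `unit` in [0, 1).
fn jittered(delay: Duration, unit: f64) -> Duration {
    assert!((0.0..1.0).contains(&unit), "unit must be in [0, 1)");
    // unit = 0.0 gives 0.5 * delay; unit approaching 1.0 approaches delay.
    delay.mul_f64(0.5 + 0.5 * unit)
}
```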

Per-test overrides

Nextest supports per-test overrides for retries, letting you mark a subset of tests as needing retries. For example, to mark test names containing "test_e2e" as requiring retries:

[[profile.default.overrides]]

filter = 'test(test_e2e)'

retries = 2

Per-test overrides support the full set of delay and backoff options as well. For example:

[[profile.default.overrides]]

filter = 'test(test_remote_api)'

retries = { backoff = "exponential", count = 2, delay = "5s", jitter = true }

Note: The --retries command-line option and the NEXTEST_RETRIES environment variable both disable overrides.
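One way to picture the note above is as a chain of fallbacks for a single test's retry count. This Rust sketch is a model built on stated assumptions, not nextest's resolution code. It assumes the command-line flag takes priority over the environment variable, and `resolve_retries` and `env_retries` are hypothetical names. See Hierarchical configuration for the authoritative order.

```rust
/// Reads NEXTEST_RETRIES as a plain count, if set and valid.
fn env_retries() -> Option<u32> {
    std::env::var("NEXTEST_RETRIES").ok()?.parse().ok()
}

/// A --retries value or NEXTEST_RETRIES wins outright, so a matching
/// per-test override is ignored; otherwise the override applies, and
/// the profile default is the final fallback.
fn resolve_retries(
    cli: Option<u32>,
    env: Option<u32>,
    matching_override: Option<u32>,
    profile_default: u32,
) -> u32 {
    cli.or(env).or(matching_override).unwrap_or(profile_default)
}
```

For example, a test matched by the test(test_e2e) override above would get 2 retries, unless --retries or NEXTEST_RETRIES is set.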

JUnit support

Flaky test detection is integrated with nextest's JUnit support. For more information, see JUnit support.

Slow tests and timeouts

Slow tests can bottleneck your test run. Nextest identifies tests that take more than a certain amount of time, and optionally lets you terminate tests that take too long to run.

Slow tests

For tests that take more than a certain amount of time (by default 60 seconds), nextest prints out a SLOW status. For example, in the output below, test_slow_timeout takes 90 seconds to execute and is marked as a slow test.

Starting 6 tests across 8 binaries (19 skipped)

PASS [ 0.001s] nextest-tests::basic test_success

PASS [ 0.001s] nextest-tests::basic test_success_should_panic

PASS [ 0.001s] nextest-tests::other other_test_success

PASS [ 0.001s] nextest-tests tests::unit_test_success

PASS [ 1.501s] nextest-tests::basic test_slow_timeout_2

SLOW [> 60.000s] nextest-tests::basic test_slow_timeout

PASS [ 90.001s] nextest-tests::basic test_slow_timeout

------------

Summary [ 90.002s] 6 tests run: 6 passed (1 slow), 19 skipped

Configuring timeouts

To customize how long it takes before a test is marked slow, you can use the slow-timeout configuration parameter. For example, to set a timeout of 2 minutes before a test is marked slow, add this to .config/nextest.toml:

[profile.default]

slow-timeout = "2m"

Nextest uses the humantime parser: see its documentation for the full supported syntax.

Terminating tests after a timeout

Nextest lets you optionally specify a number of slow-timeout periods after which a test is terminated. For example, to configure a slow timeout of 30 seconds and for tests to be terminated after 120 seconds (4 periods of 30 seconds), add this to .config/nextest.toml:

[profile.default]

slow-timeout = { period = "30s", terminate-after = 4 }

Example

The run below is configured with:

slow-timeout = { period = "1s", terminate-after = 2 }

Starting 5 tests across 8 binaries (20 skipped)

PASS [ 0.001s] nextest-tests::basic test_success

PASS [ 0.001s] nextest-tests::basic test_success_should_panic

PASS [ 0.001s] nextest-tests::other other_test_success

PASS [ 0.001s] nextest-tests tests::unit_test_success

SLOW [> 1.000s] nextest-tests::basic test_slow_timeout

SLOW [> 2.000s] nextest-tests::basic test_slow_timeout

TIMEOUT [ 2.001s] nextest-tests::basic test_slow_timeout

--- STDOUT: nextest-tests::basic test_slow_timeout ---

running 1 test

------------

Summary [ 2.001s] 5 tests run: 4 passed, 1 timed out, 20 skipped
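A simplified Rust model of how period and terminate-after interact: a test is reported slow once each full period elapses, and is terminated once period × terminate-after has passed. The types and function names are hypothetical, and the model ignores details such as the exact order in which nextest prints its SLOW and TIMEOUT lines.

```rust
use std::time::Duration;

/// Hypothetical model of the slow-timeout setting (not nextest's internal type).
struct SlowTimeout {
    period: Duration,
    terminate_after: Option<u32>,
}

#[derive(Debug, PartialEq)]
enum Status {
    Running,
    /// Number of whole slow-timeout periods elapsed so far.
    Slow(u32),
    TimedOut,
}

/// Time after which the test is terminated: period × terminate-after, if set.
fn termination_deadline(config: &SlowTimeout) -> Option<Duration> {
    config.terminate_after.map(|n| config.period * n)
}

/// Classify a test that has been running for `elapsed`. `period` must be non-zero.
fn classify(config: &SlowTimeout, elapsed: Duration) -> Status {
    match termination_deadline(config) {
        Some(deadline) if elapsed >= deadline => Status::TimedOut,
        _ => {
            let periods = (elapsed.as_nanos() / config.period.as_nanos()) as u32;
            if periods == 0 {
                Status::Running
            } else {
                Status::Slow(periods)
            }
        }
    }
}

fn main() {
    // The example run above: slow-timeout = { period = "1s", terminate-after = 2 }
    let config = SlowTimeout { period: Duration::from_secs(1), terminate_after: Some(2) };
    for ms in [500, 1_500, 2_001] {
        println!("{ms} ms: {:?}", classify(&config, Duration::from_millis(ms)));
    }
}
```

For the end-to-end override later on this page (period = "120s", terminate-after = 5), termination_deadline gives 600 seconds, that is, 10 minutes.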

How nextest terminates tests

On Unix platforms, nextest creates a process group for each test. On timing out, nextest attempts a graceful shutdown: it first sends the SIGTERM signal to the process group, then waits for a grace period (by default 10 seconds) for the test to shut down. If the test doesn't shut itself down within that time, nextest sends SIGKILL (kill -9) to the process group to terminate it immediately.

To customize the grace period, use the slow-timeout.grace-period configuration setting. For example, with the ci profile, to terminate tests after 5 minutes with a grace period of 30 seconds:

[profile.ci]

slow-timeout = { period = "60s", terminate-after = 5, grace-period = "30s" }

To send SIGKILL to a process immediately, without a grace period, set slow-timeout.grace-period to zero:

[profile.ci]

slow-timeout = { period = "60s", terminate-after = 5, grace-period = "0s" }

Note: Starting with nextest 0.9.61, the slow-timeout.grace-period setting is also applied to terminations due to Ctrl-C or other signals. With older versions, nextest always waits 10 seconds before sending SIGKILL.

On other platforms including Windows, nextest terminates the test immediately in a manner akin to SIGKILL. (On Windows, nextest uses job objects to kill the test process and all its descendants.) The slow-timeout.grace-period configuration setting is ignored.
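The SIGTERM, grace period, SIGKILL sequence can be sketched in Rust for Unix. This is a simplified, hypothetical helper: it signals a single child process rather than a whole process group as nextest does, and it uses sh's kill builtin to send SIGTERM so that no libc dependency is needed. Child::kill sends SIGKILL on Unix.

```rust
use std::io;
use std::os::unix::process::ExitStatusExt; // ExitStatus::signal()
use std::process::{Child, Command, ExitStatus};
use std::thread;
use std::time::{Duration, Instant};

/// Send SIGTERM, wait up to `grace` for the child to exit, then send SIGKILL.
/// Hypothetical helper; unlike nextest it signals one process, not a process group.
fn terminate_gracefully(child: &mut Child, grace: Duration) -> io::Result<ExitStatus> {
    if !grace.is_zero() {
        // Ask politely first: SIGTERM via the shell's `kill` builtin.
        let pid = child.id();
        Command::new("sh").arg("-c").arg(format!("kill -TERM {pid}")).status()?;
        let deadline = Instant::now() + grace;
        while Instant::now() < deadline {
            if let Some(status) = child.try_wait()? {
                return Ok(status); // exited within the grace period
            }
            thread::sleep(Duration::from_millis(10));
        }
    }
    // Zero grace period, or SIGTERM was ignored: force-kill and reap.
    child.kill()?;
    child.wait()
}

fn main() -> io::Result<()> {
    let mut child = Command::new("sleep").arg("30").spawn()?;
    let status = terminate_gracefully(&mut child, Duration::from_secs(10))?;
    // `sleep` does not handle SIGTERM, so it is expected to die from signal 15.
    println!("terminated by signal {:?}", status.signal());
    Ok(())
}
```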

Per-test overrides

Nextest supports per-test overrides for the slow-timeout and terminate-after settings.

For example, some end-to-end tests might take longer to run and sometimes get stuck. For tests whose names contain the substring test_e2e, to configure a slow timeout of 120 seconds and terminate tests after 10 minutes (5 periods of 120 seconds):

[[profile.default.overrides]]

filter = 'test(test_e2e)'

slow-timeout = { period = "120s", terminate-after = 5 }

See Override precedence for more about the order in which overrides are evaluated.

Leaky tests

Some tests create subprocesses but may not clean them up properly. Typical scenarios include:

- A test creates a server process to test against, but does not shut it down at the end of the test.

- A test starts a subprocess with the intent to shut it down, but panics, and does not use the RAII pattern to clean up subprocesses.

  - Note that std::process::Child does not kill subprocesses on being dropped. Some alternatives, such as tokio::process::Command, can be configured to do so.

- This can happen transitively as well: a test creates a process which creates its own subprocess, and so on.
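One way to follow the RAII advice above in Rust is a small guard type that kills and waits on its child in Drop, so cleanup also runs when a test panics and unwinds. ChildGuard and is_running are hypothetical names. The guard only reaches its direct child, so grandchildren (the transitive case) can still leak, and no cleanup runs if the test binary is built with panic = "abort". The liveness check assumes a Unix shell with kill -0.

```rust
use std::io;
use std::process::{Child, Command, Stdio};

/// RAII guard that kills and reaps its child process when dropped, including
/// when the owning test panics and unwinds. Hypothetical type for illustration.
struct ChildGuard(Child);

impl ChildGuard {
    fn spawn(cmd: &mut Command) -> io::Result<Self> {
        cmd.spawn().map(ChildGuard)
    }

    fn id(&self) -> u32 {
        self.0.id()
    }
}

impl Drop for ChildGuard {
    fn drop(&mut self) {
        // Ignore errors: the process may already have exited on its own.
        let _ = self.0.kill();
        let _ = self.0.wait();
    }
}

/// Returns true if a process with this PID still exists (via `kill -0`).
fn is_running(pid: u32) -> bool {
    Command::new("sh")
        .arg("-c")
        .arg(format!("kill -0 {pid}"))
        .stderr(Stdio::null())
        .status()
        .map(|s| s.success())
        .unwrap_or(false)
}

fn main() -> io::Result<()> {
    let server = ChildGuard::spawn(Command::new("sleep").arg("120"))?;
    let pid = server.id();
    // Dropping the guard (explicitly here, or during unwinding after a panic)
    // kills and reaps `sleep`.
    drop(server);
    println!("still running after drop? {}", is_running(pid));
    Ok(())
}
```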

Nextest can detect some, but not all, such situations. If nextest detects a subprocess leak, it marks the corresponding test as leaky.

Leaky tests nextest detects

Currently, nextest is limited to detecting subprocesses that inherit standard output or standard error from the test. For example, here's a test that nextest will mark as leaky.

#[test]
fn test_subprocess_doesnt_exit() {
    let mut cmd = std::process::Command::new("sleep");
    cmd.arg("120");
    cmd.spawn().unwrap();
}

For this test, nextest will output something like:

Starting 1 tests across 8 binaries (24 skipped)

LEAK [ 0.103s] nextest-tests::basic test_subprocess_doesnt_exit

------------

Summary [ 0.103s] 1 tests run: 1 passed (1 leaky), 24 skipped

Leaky tests that are otherwise successful are considered to have passed.

Leaky tests that nextest currently does not detect

Tests which spawn subprocesses that do not inherit either standard output or standard error are not currently detected by nextest. For example, the following test is not currently detected as leaky:

#[test]
fn test_subprocess_doesnt_exit_2() {
    let mut cmd = std::process::Command::new("sleep");
    cmd.arg("120")
        .stdout(std::process::Stdio::null())
        .stderr(std::process::Stdio::null());
    cmd.spawn().unwrap();
}

Detecting such tests is a very difficult problem to solve, particularly on Unix platforms.

Note: This section is not part of nextest's stability guarantees. In the future, these tests might get marked as leaky by nextest.

Configuring the leak timeout

Nextest waits a specified amount of time (by default 100 milliseconds) after the test exits for standard output and standard error to be closed. In rare cases, you may need to configure the leak timeout.

To do so, use the leak-timeout configuration parameter. For example, to wait up to 500 milliseconds after the test exits, add this to .config/nextest.toml:

[profile.default]

leak-timeout = "500ms"

Nextest also supports per-test overrides for the leak timeout.
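The detection approach described above (waiting after the test exits for its output pipes to close) can be sketched in Rust. This hypothetical helper watches only standard output, whereas nextest watches both streams, and the example relies on sh to start a background process that inherits the pipe.

```rust
use std::io::{self, Read};
use std::process::{Command, Stdio};
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// Run `cmd` with a piped stdout, wait for it to exit, then allow `leak_timeout`
/// for the pipe to reach EOF. Returns true ("leaky") if it is still open.
/// Hypothetical helper for illustration only.
fn run_and_detect_leak(cmd: &mut Command, leak_timeout: Duration) -> io::Result<bool> {
    let mut child = cmd.stdout(Stdio::piped()).spawn()?;
    let mut stdout = child.stdout.take().expect("stdout was piped");
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // read_to_end returns only once every holder of the write end has closed it,
        // including any subprocess that inherited the pipe.
        let mut sink = Vec::new();
        let _ = stdout.read_to_end(&mut sink);
        let _ = tx.send(());
    });
    child.wait()?;
    // Still no EOF `leak_timeout` after the exit: a descendant holds the pipe open.
    Ok(rx.recv_timeout(leak_timeout).is_err())
}

fn main() -> io::Result<()> {
    let leaky = run_and_detect_leak(
        Command::new("sh").args(["-c", "sleep 3 &"]),
        Duration::from_millis(100),
    )?;
    println!("leaky: {leaky}");
    Ok(())
}
```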

Filter expressions

Nextest supports a domain-specific language (DSL) for filtering tests. The DSL is inspired by, and is similar to, Bazel query and Mercurial revsets.

Example: Running all tests in a crate and its dependencies

To run all tests in my-crate and its dependencies, run:

cargo nextest run -E 'deps(my-crate)'

The argument passed into the -E command-line option is called a filter expression. The rest of this page describes the full syntax for the expression DSL.

The filter expression DSL

A filter expression defines a set of tests. A test will be run if it matches a filter expression.

On the command line, multiple filter expressions can be passed in. A test will be run if it matches any of these expressions. For example, to run tests whose names contain the string my_test as well as all tests in package my-crate, run:

cargo nextest run -E 'test(my_test)' -E 'package(my-crate)'

This is equivalent to:

cargo nextest run -E 'test(my_test) + package(my-crate)'

Examples

- package(serde) and test(deserialize): every test containing the string deserialize in the package serde
- deps(nextest*): all tests in packages whose names start with nextest, and all of their (possibly transitive) dependencies
- not (test(/parse[0-9]*/) | test(run)): every test name not matching the regex parse[0-9]* or the substring run

Note: If you pass in both a filter expression and a standard, substring-based filter, tests must match both filter expressions and substring-based filters.

For example, the command:

cargo nextest run -E 'package(foo)' -- test_bar test_baz

will run all tests that are both in package foo and match test_bar or test_baz.

DSL reference

This section contains the full set of operators supported by the DSL.

Basic predicates

- all(): include all tests.
- test(name-matcher): include all tests matching name-matcher.
- package(name-matcher): include all tests in packages (crates) matching name-matcher.
- deps(name-matcher): include all tests in crates matching name-matcher, and all of their (possibly transitive) dependencies.
- rdeps(name-matcher): include all tests in crates matching name-matcher, and all the crates that (possibly transitively) depend on name-matcher.
- kind(name-matcher): include all tests in binary kinds matching name-matcher. Binary kinds include:
  - lib for unit tests, typically in the src/ directory
  - test for integration tests, typically in the tests/ directory
  - bench for benchmark tests
  - bin for tests within [[bin]] targets
  - proc-macro for tests in the src/ directory of a procedural macro
- binary(name-matcher): include all tests in binary names matching name-matcher.
  - For tests of kind lib and proc-macro, the binary name is the same as the name of the crate.
  - Otherwise, it's the name of the integration test, benchmark, or binary target.
- binary_id(name-matcher): include all tests in binary IDs matching name-matcher.
- platform(host) or platform(target): include all tests that are built for the host or target platform, respectively.
- none(): include no tests.

Note: If a filter expression always excludes a particular binary, it will not be run, even to get the list of tests within it. This means that a command like:

cargo nextest list -E 'platform(host)'

will not execute any test binaries built for the target platform. This is generally what you want, but if you would like to list tests anyway, include a test() predicate. For example, to list test binaries for the target platform (using, for example, a target runner), but skip running them:

cargo nextest list -E 'platform(host) + not test(/.*/)' --verbose

Name matchers

- =string: equality matcher, which matches a package or test name that's equal to string.
- ~string: contains matcher, which matches a package or test name containing string.
- /regex/: regex matcher, which matches a package or test name if any part of it matches the regular expression regex. To match the entire string against a regular expression, use /^regex$/. The implementation uses the regex crate.
- #glob: glob matcher, which matches a package or test name if the full name matches the glob expression glob. The implementation uses the globset crate.
- string: default matching strategy.
  - For test() predicates, this is the contains matcher, equivalent to ~string.
  - For package-related predicates (package(), deps(), and rdeps()), this is the glob matcher, equivalent to #string.
  - For binary-related predicates (binary() and binary_id()), this is also the glob matcher.
  - For kind() and platform(), this is the equality matcher, equivalent to =string.

If you're constructing an expression string programmatically, always use a prefix to avoid ambiguity.

Escape sequences

The equality, contains, and glob matchers can contain escape sequences, preceded by a backslash (\).

- \n: line feed
- \r: carriage return
- \t: tab
- \\: backslash
- \/: forward slash
- \): closing parenthesis
- \,: comma
- \u{7FFF}: 24-bit Unicode character code (up to 6 hex digits)

For the glob matcher, to match against a literal glob metacharacter such as * or ?, enclose it in square brackets: [*] or [?].

All other escape sequences are invalid.

The regular expression matcher supports the same escape sequences that the regex crate does. This includes character classes like \d. Additionally, \/ is interpreted as an escaped /.
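When constructing expressions programmatically, as recommended above, literal names should be escaped before they are embedded. This Rust sketch applies the escape sequences listed for the equality, contains, and glob matchers and always uses the = prefix. The helper names are hypothetical, and the sketch does not handle glob metacharacters, which need square brackets instead.

```rust
/// Escape a literal string for use inside an equality (`=`) or contains (`~`)
/// matcher, using only the escape sequences listed above. Hypothetical helper.
fn escape_matcher_literal(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '\\' => out.push_str("\\\\"),
            '/' => out.push_str("\\/"),
            ')' => out.push_str("\\)"),
            ',' => out.push_str("\\,"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            other => out.push(other),
        }
    }
    out
}

/// Build an exact-match test() predicate; the explicit `=` prefix avoids
/// depending on the default matcher for test().
fn exact_test_filter(name: &str) -> String {
    format!("test(={})", escape_matcher_literal(name))
}

fn main() {
    let filter = exact_test_filter("tests::weird,name");
    println!("{filter}"); // test(=tests::weird\,name)
    // Passing the expression as its own argument avoids shell quoting entirely, e.g.
    // std::process::Command::new("cargo").args(["nextest", "run", "-E", filter.as_str()]);
}
```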

Operators

- set_1 & set_2, set_1 and set_2: the intersection of set_1 and set_2
- set_1 | set_2, set_1 + set_2, set_1 or set_2: the union of set_1 and set_2
- not set, !set: include everything not included in set
- set_1 - set_2: equivalent to set_1 and not set_2
- (set): include everything in set

Operator precedence

In order from highest to lowest, or in other words from tightest to loosest binding:

- ()
- not, !
- and, &, -
- or, |, +

Within a precedence group, operators bind from left to right.

Examples

- test(a) & test(b) | test(c) is equivalent to (test(a) & test(b)) | test(c).
- test(a) | test(b) & test(c) is equivalent to test(a) | (test(b) & test(c)).
- test(a) & test(b) - test(c) is equivalent to (test(a) & test(b)) - test(c).
- not test(a) | test(b) is equivalent to (not test(a)) | test(b).
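To see why precedence matters, this Rust sketch evaluates test(a) | test(b) & test(c) over a small made-up list of test names, once with nextest's grouping and once with the union forced first. test_pred is a hypothetical stand-in for test() with the default contains matcher, and the BTreeSet operators play the role of | and &.

```rust
use std::collections::BTreeSet;

/// Hypothetical stand-in for `test(substr)` using the default contains matcher.
fn test_pred(names: &[&'static str], substr: &str) -> BTreeSet<&'static str> {
    names.iter().copied().filter(|name| name.contains(substr)).collect()
}

/// Evaluate `test(a) | test(b) & test(c)` as nextest groups it, and with the
/// union forced first, over a small made-up list of test names.
fn precedence_demo() -> (BTreeSet<&'static str>, BTreeSet<&'static str>) {
    let names = ["a", "b", "c", "ab", "bc", "ac", "abc"];
    let (a, b, c) = (test_pred(&names, "a"), test_pred(&names, "b"), test_pred(&names, "c"));
    // `and`/`&` binds tighter than `or`/`|`: test(a) | (test(b) & test(c))
    let as_parsed = &a | &(&b & &c);
    // Explicit parentheses change the meaning: (test(a) | test(b)) & test(c)
    let regrouped = &(&a | &b) & &c;
    (as_parsed, regrouped)
}

fn main() {
    let (as_parsed, regrouped) = precedence_demo();
    println!("test(a) | test(b) & test(c)   -> {as_parsed:?}");
    println!("(test(a) | test(b)) & test(c) -> {regrouped:?}");
}
```

The two results differ: with nextest's precedence, a and ab are included because they match test(a) on its own, whereas the regrouped expression requires every match to contain c.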

More information about filter expressions

This section covers additional information that is of interest to nextest's developers and curious readers.


Motivation

Developer tools often work with some notion of sets, and many of them have grown some kind of domain-specific query language to be able to efficiently specify those sets.

The biggest advantage of a query language is orthogonality: rather than every command having to grow a number of options such as --include and --exclude, developers can learn the query language once and use it everywhere.

Design decisions

Nextest's filter expressions are meant to be specified at the command line as well as in configuration. This led to the following design decisions:

- No quotes: Filter expressions do not have embedded quotes. This lets users use either single ('') or double quotes ("") to specify filter expressions, without having to worry about escaping them.
- Minimize nesting of parens: If an expression language uses parentheses or other brackets heavily (e.g. Rust's cfg() expressions), getting them wrong can be annoying when trying to write an expression. Text editors typically highlight matching and missing parens, but there's no such immediate feedback on the command line.
- Infix operators: Nextest's filter expressions use infix operators, which are more natural to read and write for most people. (As an alternative, Rust's cfg() expressions use the prefix operators all() and any().)
- Operator aliases: Operators are supported as both words (and, or, not) and symbols (&, |, +, -, !), letting users write expressions in the style most natural to them. Filter expressions are a small language, so there's no need to be particularly opinionated.

Archiving and reusing builds

In some cases, it can be useful to separate out building tests from running them. Nextest supports archiving builds on one machine, and then extracting the archive to run tests on another machine.

Terms

- Build machine: The computer that builds tests.

- Target machine: The computer that runs tests.

Use cases

- Cross-compilation. The build machine has a different architecture, or runs a different operating system, from the target machine.

- Test partitioning. Build once on the build machine, then partition test execution across multiple target machines.

- Saving execution time on more valuable machines. For example, build tests on a regular machine, then run them on a machine with a GPU attached to it.

Requirements

- The project source must be checked out to the same revision on the target machine. This might be needed for test fixtures and other assets, and nextest sets the right working directory relative to the workspace root when executing tests.

- It is your responsibility to transfer over the archive. Use the examples below as a template.

- Nextest must be installed on the target machine. For best results, use the same version of nextest on both machines.

Non-requirements

- Cargo does not need to be installed on the target machine. If cargo is unavailable, replace cargo nextest with cargo-nextest nextest in the following examples.

Creating archives

cargo nextest archive --archive-file <name-of-archive.tar.zst> creates an archive with the following contents:

- Cargo-related metadata, at the location target/nextest/cargo-metadata.json.
- Metadata about test binaries, at the location target/nextest/binaries-metadata.json.
- All test binaries
- Other relevant files:
  - Dynamic libraries that test binaries might link to
  - Non-test binaries used by integration tests
  - Starting with nextest 0.9.66, build script output directories for workspace packages that have associated test binaries

    NOTE: Currently, OUT_DIRs are only archived one level deep to avoid bloating archives too much. In the future, we may add configuration to archive more or less of the output directory. If you have a use case that would benefit from this, please file an issue.

Note that archives do not include the source code for your project. It is your responsibility to ensure that the source code for your workspace is transferred over to the target machine and has the same contents.

Currently, the only format supported is a Zstandard-compressed tarball (.tar.zst).

Running tests from archives

cargo nextest list and run support a new --archive-file option. This option accepts archives created by cargo nextest archive as above.

By default, archives are extracted to a temporary directory, and nextest remaps paths to use the new target directory. To specify the directory archives should be extracted to, use the --extract-to option.

Specifying a new location for the source code

By default, nextest expects the workspace's source code to be in the same location on both the build and target machines. To specify a new location for the workspace, use the --workspace-remap <path-to-workspace-root> option with the list or run commands.

Example: Simple build/run split

- Build and archive tests:

  Here you should specify all the options you would normally use to build your tests.

  cargo nextest archive --workspace --all-features --archive-file my-archive.tar.zst

- Run the tests:

  The archive keeps the options used to build the tests, so you should not specify them again.

  cargo nextest run --archive-file my-archive.tar.zst

Example: Use in GitHub Actions

See this working example for how to reuse builds and partition test runs on GitHub Actions.

Example: Cross-compilation

While cross-compiling code, some tests may need to be run on the host platform. (See the note about Filtering by build platform for more.)

On the build machine

- Build and run host-only tests:

  cargo nextest run --target <TARGET> -E 'platform(host)'

- Archive tests:

  cargo nextest archive --target <TARGET> --archive-file my-archive.tar.zst

- Copy my-archive.tar.zst to the target machine.

On the target machine

- Check out the project repository to a path <REPO-PATH>, to the same revision as the build machine.

- List target-only tests:

  cargo nextest list -E 'platform(target)' \
      --archive-file my-archive.tar.zst \
      --workspace-remap <REPO-PATH>

- Run target-only tests:

  cargo nextest run -E 'platform(target)' \
      --archive-file my-archive.tar.zst \
      --workspace-remap <REPO-PATH>

Manually creating your own archives

You can also create and manage your own archives, with the following options to cargo nextest list and run:

- --binaries-metadata: The path to JSON metadata generated by cargo nextest list --list-type binaries-only --message-format json.
- --target-dir-remap: A possible new location for the target directory. Requires --binaries-metadata.
- --cargo-metadata: The path to JSON metadata generated by cargo metadata --format-version 1.

Making tests relocatable

Some tests may need to be modified to handle changes in the workspace and target directories. Some common situations:

- To obtain the path to the source directory, Cargo provides the CARGO_MANIFEST_DIR environment variable at both build time and runtime. For relocatable tests, use the value of CARGO_MANIFEST_DIR at runtime. This means std::env::var("CARGO_MANIFEST_DIR"), not env!("CARGO_MANIFEST_DIR").

  If the workspace is remapped, nextest automatically sets CARGO_MANIFEST_DIR to the new location.

- To obtain the path to a crate's executables, Cargo provides the CARGO_BIN_EXE_<name> environment variable to integration tests at build time. To handle target directory remapping, use the value of NEXTEST_BIN_EXE_<name> at runtime.

  To retain compatibility with cargo test, you can fall back to the value of CARGO_BIN_EXE_<name> at build time.
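A minimal sketch of both recommendations for an integration test, assuming a binary target named my_bin (a hypothetical name). option_env! is used for the compile-time fallback so that the example still compiles outside a Cargo integration test, where CARGO_BIN_EXE_my_bin is not defined.

```rust
use std::env;
use std::ffi::OsString;
use std::path::PathBuf;

/// Source directory, read at runtime so that a remapped workspace is honoured.
/// (env!("CARGO_MANIFEST_DIR") would bake in the build machine's path.)
fn manifest_dir() -> Option<PathBuf> {
    env::var_os("CARGO_MANIFEST_DIR").map(PathBuf::from)
}

/// Prefer the runtime value (set by nextest); otherwise fall back to the
/// compile-time value that plain `cargo test` relies on.
fn resolve_bin_exe(runtime: Option<OsString>, compile_time: Option<&'static str>) -> Option<PathBuf> {
    runtime.map(PathBuf::from).or_else(|| compile_time.map(PathBuf::from))
}

/// Path to the hypothetical binary target `my_bin`.
fn my_bin_path() -> Option<PathBuf> {
    // option_env! (unlike env!) yields None instead of failing to compile.
    resolve_bin_exe(env::var_os("NEXTEST_BIN_EXE_my_bin"), option_env!("CARGO_BIN_EXE_my_bin"))
}

fn main() {
    println!("manifest dir: {:?}", manifest_dir());
    println!("my_bin: {:?}", my_bin_path());
}
```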

Options and arguments for cargo nextest archive

Build and archive tests

Usage: cargo nextest archive [OPTIONS] --archive-file <PATH>

Options:

-v, --verbose Verbose output [env: NEXTEST_VERBOSE=]

--color <WHEN> Produce color output: auto, always, never [env: CARGO_TERM_COLOR=] [default: auto]

-h, --help Print help (see more with '--help')

Package selection:

-p, --package <PACKAGES> Package to test

--workspace Test all packages in the workspace

--exclude <EXCLUDE> Exclude packages from the test

--all Alias for --workspace (deprecated)

Target selection:

--lib Test only this package's library unit tests

--bin <BIN> Test only the specified binary

--bins Test all binaries

--example <EXAMPLE> Test only the specified example

--examples Test all examples

--test <TEST> Test only the specified test target

--tests Test all targets

--bench <BENCH> Test only the specified bench target

--benches Test all benches

--all-targets Test all targets

Feature selection:

-F, --features <FEATURES> Space or comma separated list of features to activate

--all-features Activate all available features

--no-default-features Do not activate the `default` feature

Compilation options:

--build-jobs <N> Number of build jobs to run

-r, --release Build artifacts in release mode, with optimizations

--cargo-profile <NAME> Build artifacts with the specified Cargo profile

--target <TRIPLE> Build for the target triple

--target-dir <DIR> Directory for all generated artifacts

--unit-graph Output build graph in JSON (unstable)

--timings[=<FMTS>] Timing output formats (unstable) (comma separated): html, json

Manifest options:

--manifest-path <PATH> Path to Cargo.toml

--frozen Require Cargo.lock and cache are up to date

--locked Require Cargo.lock is up to date

--offline Run without accessing the network

Other Cargo options:

--cargo-quiet Do not print cargo log messages

--cargo-verbose... Use cargo verbose output (specify twice for very verbose/build.rs output)

--ignore-rust-version Ignore `rust-version` specification in packages

--future-incompat-report Outputs a future incompatibility report at the end of the build

--config <KEY=VALUE> Override a configuration value

-Z <FLAG> Unstable (nightly-only) flags to Cargo, see 'cargo -Z help' for details

Archive options:

--archive-file <PATH> File to write archive to

--archive-format <FORMAT> Archive format [default: auto] [possible values: auto, tar-zst]

--zstd-level <LEVEL> Zstandard compression level (-7 to 22, higher is more compressed + slower) [default: 0]

Config options:

--config-file <PATH>

Config file [default: workspace-root/.config/nextest.toml]

--tool-config-file <TOOL:ABS_PATH>

Tool-specific config files

--override-version-check

Override checks for the minimum version defined in nextest's config

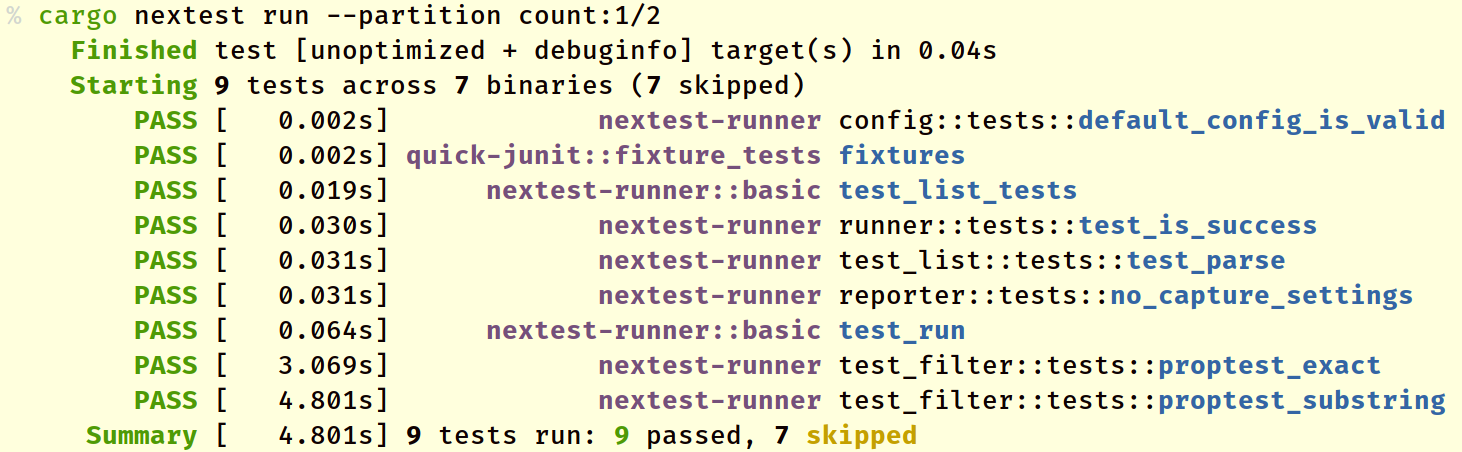

Partitioning test runs in CI

For CI scenarios where test runs take too long on a single machine, nextest supports automatically partitioning or sharding tests into buckets, using the --partition option.

cargo-nextest supports two kinds of partitioning: counted and hashed.

Counted partitioning

Counted partitioning is specified with --partition count:m/n, where m and n are both integers, and 1 ≤ m ≤ n. Specifying this operator means "run tests in count-based bucket m of n".

Here's an example of running tests in bucket 1 of 2:

cargo nextest run --partition count:1/2

Tests not in the current bucket are marked skipped.

Counted partitioning is done per test binary. This means that the tests in one binary do not influence counting for other binaries.

Counted partitioning also applies after all other test filters. For example, if you specify cargo nextest run --partition count:1/3 test_parsing, nextest first selects tests that match the substring test_parsing, then buckets this subset of tests into 3 partitions and runs the tests in partition 1.
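One way to picture counted partitioning is a round-robin assignment over each binary's filtered test list, with the count restarting for every binary. This Rust sketch assumes that round-robin strategy; treat the function as a hypothetical illustration of the properties described above rather than nextest's exact algorithm.

```rust
/// Select the tests in bucket `m` of `n` (both 1-based) from one binary's
/// already-filtered test list, assuming round-robin assignment.
fn counted_bucket<'a>(tests: &[&'a str], m: usize, n: usize) -> Vec<&'a str> {
    assert!(1 <= m && m <= n, "expected 1 <= m <= n");
    tests
        .iter()
        .enumerate()
        .filter(|(i, _)| i % n == m - 1)
        .map(|(_, t)| *t)
        .collect()
}

fn main() {
    // Counting restarts for every test binary.
    let binaries = [
        ("my-crate", vec!["a", "b", "c", "d", "e"]),
        ("my-crate::integration", vec!["x", "y"]),
    ];
    for (binary, tests) in &binaries {
        println!("{binary}: {:?}", counted_bucket(tests, 1, 2));
    }
}
```

Because the assignment depends on each test's position in the filtered list, adding a test or changing a filter can move other tests into different buckets; hashed sharding avoids that.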

Hashed sharding

Hashed sharding is specified with --partition hash:m/n, where m and n are both integers, and 1 ≤ m ≤ n. Specifying this operator means "run tests in hashed bucket m of n".

The main benefit of hashed sharding is that it is completely deterministic (the hash is based on a combination of the binary and test names). Unlike with counted partitioning, adding or removing tests, or changing test filters, will never cause a test to fall into a different bucket. The hash algorithm is guaranteed never to change within a nextest version series.

For sufficiently large numbers of tests, hashed sharding produces roughly the same number of tests per bucket. However, smaller test runs may result in an uneven distribution.
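The determinism property can be illustrated by deriving a bucket from a hash of the binary ID and test name alone. In this Rust sketch, FNV-1a is a stand-in chosen for brevity; this page does not say which hash nextest uses, so bucket numbers from this code will not match nextest's. The standard library's DefaultHasher is avoided because its output is not guaranteed to be stable across Rust releases.

```rust
/// 64-bit FNV-1a: tiny and stable, used here purely for illustration.
fn fnv1a(bytes: &[u8]) -> u64 {
    let mut hash: u64 = 0xcbf2_9ce4_8422_2325; // FNV offset basis
    for &b in bytes {
        hash ^= u64::from(b);
        hash = hash.wrapping_mul(0x0000_0100_0000_01b3); // FNV prime
    }
    hash
}

/// 1-based bucket for a test, derived only from its binary ID and name,
/// so other tests and filters cannot influence it. Hypothetical helper.
fn hashed_bucket(binary_id: &str, test_name: &str, n: u64) -> u64 {
    let mut key = Vec::with_capacity(binary_id.len() + 1 + test_name.len());
    key.extend_from_slice(binary_id.as_bytes());
    key.push(0); // separator so ("ab", "c") and ("a", "bc") hash differently
    key.extend_from_slice(test_name.as_bytes());
    fnv1a(&key) % n + 1
}

fn main() {
    for name in ["tests::parse", "tests::render", "tests::io"] {
        println!("{name} -> bucket {} of 3", hashed_bucket("my-crate", name, 3));
    }
}
```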

Reusing builds

By default, each job has to do its own build before starting a test run. To save on the extra work, nextest supports archiving builds in one job for later reuse in other jobs. See the example below for how to do this.

Example: Use in GitHub Actions

See this working example for how to reuse builds and partition test runs on GitHub Actions.

Example: Use in GitLab CI

GitLab can parallelize jobs across runners. This works neatly with --partition. For example:

test:
  stage: test
  parallel: 3
  script:
    - echo "Node index - ${CI_NODE_INDEX}. Total amount - ${CI_NODE_TOTAL}"
    - time cargo nextest run --workspace --partition count:${CI_NODE_INDEX}/${CI_NODE_TOTAL}

This creates three jobs that run in parallel: test 1/3, test 2/3 and test 3/3.

Target runners

If you're cross-compiling Rust code, you may wish to run tests through a wrapper executable or script. For this purpose, nextest supports target runners, using the same configuration options used by Cargo:

- The environment variable CARGO_TARGET_<triple>_RUNNER, if it matches the target platform, takes highest precedence.
- Otherwise, nextest reads the target.<triple>.runner and target.<cfg>.runner settings from .cargo/config.toml.

Example

If you're on Linux cross-compiling to Windows, you can choose to run tests through Wine.

If you add the following to .cargo/config.toml:

[target.x86_64-pc-windows-msvc]

runner = "wine"

Or, in your shell:

export CARGO_TARGET_X86_64_PC_WINDOWS_MSVC_RUNNER=wine

Then, running this command will cause your tests to be run as wine <test-binary>:

cargo nextest run --target x86_64-pc-windows-msvc

Note: If your target runner is a shell script, it might malfunction on macOS due to System Integrity Protection's environment sanitization. Nextest provides the NEXTEST_LD_* and NEXTEST_DYLD_* environment variables as workarounds: see Environment variables nextest sets for more.

Cross-compiling

While cross-compiling code, some tests may need to be run on the host platform. (See the note about Filtering by build platform for more.)

For tests that run on the host platform, nextest uses the target runner defined for the host. For example, if cross-compiling from x86_64-unknown-linux-gnu to x86_64-pc-windows-msvc, nextest will use the CARGO_TARGET_X86_64_UNKNOWN_LINUX_GNU_RUNNER for proc-macro and other host-only tests, and CARGO_TARGET_X86_64_PC_WINDOWS_MSVC_RUNNER for other tests.

This behavior is similar to that of per-test overrides.
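The variable-selection rule above can be sketched as follows. The helpers are hypothetical and cover only the environment-variable lookup, not the target.<triple>.runner or target.<cfg>.runner settings in .cargo/config.toml. The name transformation (uppercase the triple, replace - and . with _) follows Cargo's convention for configuration environment variables.

```rust
/// Cargo's runner override variable for a target triple.
fn runner_env_var(triple: &str) -> String {
    let key: String = triple
        .chars()
        .map(|c| match c {
            '-' | '.' => '_',
            other => other.to_ascii_uppercase(),
        })
        .collect();
    format!("CARGO_TARGET_{key}_RUNNER")
}

/// Which platform a test binary was built for (hypothetical type).
#[derive(Clone, Copy)]
enum BuildPlatform {
    Host,
    Target,
}

/// Host-only tests (such as proc-macro tests) use the host triple's runner.
fn runner_var_for(platform: BuildPlatform, host: &str, target: &str) -> String {
    match platform {
        BuildPlatform::Host => runner_env_var(host),
        BuildPlatform::Target => runner_env_var(target),
    }
}

fn main() {
    let (host, target) = ("x86_64-unknown-linux-gnu", "x86_64-pc-windows-msvc");
    for (label, platform) in [("proc-macro test", BuildPlatform::Host), ("other test", BuildPlatform::Target)] {
        let var = runner_var_for(platform, host, target);
        println!("{label}: {var} = {:?}", std::env::var(&var).ok());
    }
}
```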

Debugging output

Nextest invokes target runners during both the list and run phases. During the list phase, nextest has stringent rules for the contents of standard output.

If a target runner produces debugging or any other kind of output, it MUST NOT go to standard output. You can produce output to standard error, to a file on disk, etc.

For example, this target runner will not work:

#!/bin/bash
echo "This is some debugging output"
"$@"

Instead, redirect debugging output to standard error:

#!/bin/bash
echo "This is some debugging output" >&2
"$@"
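If you prefer to write a target runner in Rust, the same rule applies. This hypothetical wrapper sends its debugging output to standard error, inherits standard output so that the test binary's own output reaches nextest unchanged, and exits with the test binary's exit code (or 1 if it was killed by a signal). It is a sketch, not a drop-in replacement for any particular runner such as Wine.

```rust
use std::io;
use std::process::Command;

/// Run `args[0]` with the remaining arguments, keeping stdout clean.
/// Returns the child's exit code. Hypothetical helper.
fn run_wrapped(args: &[String]) -> io::Result<i32> {
    let (program, rest) = args
        .split_first()
        .ok_or_else(|| io::Error::new(io::ErrorKind::InvalidInput, "no command given"))?;
    eprintln!("This is some debugging output"); // stderr, never stdout
    // stdin, stdout and stderr are inherited, so the test binary's output passes through.
    let status = Command::new(program).args(rest).status()?;
    // `code()` is None if the process was killed by a signal; report that as failure.
    Ok(status.code().unwrap_or(1))
}

fn main() {
    let args: Vec<String> = std::env::args().skip(1).collect();
    if args.is_empty() {
        eprintln!("usage: runner <test-binary> [args...]");
        return; // nothing to run
    }
    match run_wrapped(&args) {
        Ok(code) => std::process::exit(code),
        Err(err) => {
            eprintln!("runner error: {err}");
            std::process::exit(1);
        }
    }
}
```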

Other options

Some other options accepted by cargo nextest run. Many of these options are also accepted as configuration settings and environment variables.

Runner options

- --no-fail-fast: do not exit the test run on the first failure. Most useful for CI scenarios.
- -j, --test-threads: number of tests to run simultaneously. Note that this is separate from the number of build jobs to run simultaneously, which is specified by --build-jobs.
- --run-ignored ignored-only runs ignored tests, while --run-ignored all runs both ignored and non-ignored tests.

Reporter options

--success-output and --failure-output

These options control when standard output and standard error are displayed for passing and failing tests, respectively. The possible values are:

- immediate: display output as soon as the test fails. Default for --failure-output.
- final: display output at the end of the test run.
- immediate-final: display output as soon as the test fails, and at the end of the run. This is most useful for CI jobs.
- never: never display output. Default for --success-output.

These options can also be configured via global configuration and per-test overrides. Specifying these options over the command line will override configuration settings.

--status-level and --final-status-level

- --status-level: which test statuses (PASS, FAIL, etc.) to display. There are 7 status levels: none, fail, retry, slow, pass, skip, all. Each status level causes all earlier status levels to be displayed as well (similar to log levels). (For example, setting status-level to skip will show failing, retried, slow, and passing tests along with skipped tests.) The default is pass.
- --final-status-level: which test statuses to display at the end of a test run. For example, this can be set to fail to print out a list of failing tests at the end of a test run. The default is none.
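The rule that each level includes all earlier levels maps naturally onto an ordered enum. In this Rust sketch the variant order follows the list above, and deriving Ord on a fieldless enum compares variants by declaration order. StatusLevel and is_displayed are hypothetical names, not nextest's API.

```rust
/// Status levels in the documented order; derived Ord compares by declaration order.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum StatusLevel {
    None,
    Fail,
    Retry,
    Slow,
    Pass,
    Skip,
    All,
}

/// Is a result whose own level is `result` shown when `configured` is set?
fn is_displayed(result: StatusLevel, configured: StatusLevel) -> bool {
    result != StatusLevel::None && result <= configured
}

fn main() {
    let configured = StatusLevel::Pass; // the documented default for --status-level
    for result in [StatusLevel::Fail, StatusLevel::Slow, StatusLevel::Skip] {
        println!("{result:?} shown at {configured:?}: {}", is_displayed(result, configured));
    }
}
```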

For a full list of options, see Options and arguments.

Machine-readable output

cargo-nextest can be configured to produce machine-readable JSON output for consumption by other programs. The nextest-metadata crate provides Rust types to deserialize this output into. (The same crate is used by nextest to generate the output.)

Listing tests

To produce a list of tests using the JSON output, use cargo nextest list --message-format json (or json-pretty for nicely formatted output). Here's some example output for camino:

% cargo nextest list --all-features --lib --message-format json-pretty
{
  "rust-build-meta": {
    "target-directory": "/home/rain/dev/camino/target",
    "base-output-directories": [
      "debug"
    ],
    "non-test-binaries": {},
    "build-script-out-dirs": {
      "camino 1.1.6 (path+file:///home/rain/dev/camino)": "debug/build/camino-02991de38c555ca1/out"
    },
    "linked-paths": [],
    "target-platforms": [
      {
        "triple": "x86_64-unknown-linux-gnu",
        "target-features": [
          "fxsr",
          "sse",
          "sse2"
        ]
      }
    ],
    "target-platform": null
  },
  "test-count": 5,
  "rust-suites": {
    "camino": {
      "package-name": "camino",
      "binary-id": "camino",
      "binary-name": "camino",
      "package-id": "camino 1.1.6 (path+file:///home/rain/dev/camino)",
      "kind": "lib",
      "binary-path": "/home/rain/dev/camino/target/debug/deps/camino-1bdca073ddd4474a",
      "build-platform": "target",
      "cwd": "/home/rain/dev/camino",
      "status": "listed",
      "testcases": {
        "serde_impls::tests::invalid_utf8": {
          "ignored": false,
          "filter-match": {
            "status": "matches"
          }
        },
        "serde_impls::tests::valid_utf8": {
          "ignored": false,
          "filter-match": {
            "status": "matches"
          }
        },
        "tests::test_borrowed_into": {
          "ignored": false,
          "filter-match": {
            "status": "matches"
          }
        },
        "tests::test_deref_mut": {
          "ignored": false,
          "filter-match": {
            "status": "matches"
          }
        },
        "tests::test_owned_into": {
          "ignored": false,
          "filter-match": {
            "status": "matches"
          }
        }
      }
    }
  }
}

The value of "package-id" can be matched up to the package IDs produced by running cargo metadata.

Running tests

This is currently an experimental feature. For more information, see Machine-readable output for test runs.

Configuration

cargo-nextest supports repository-specific configuration at the location .config/nextest.toml from the Cargo workspace root. The location of the configuration file can be overridden with the --config-file option.

The default configuration shipped with cargo-nextest is:

```toml
# This is the default config used by nextest. It is embedded in the binary at
# build time. It may be used as a template for .config/nextest.toml.

[store]
# The directory under the workspace root at which nextest-related files are
# written. Profile-specific storage is currently written to dir/<profile-name>.
dir = "target/nextest"

# This section defines the default nextest profile. Custom profiles are layered
# on top of the default profile.
[profile.default]
# "retries" defines the number of times a test should be retried. If set to a
# non-zero value, tests that succeed on a subsequent attempt will be marked as
# flaky. Can be overridden through the `--retries` option.
# Examples
# * retries = 3
# * retries = { backoff = "fixed", count = 2, delay = "1s" }
# * retries = { backoff = "exponential", count = 10, delay = "1s", jitter = true, max-delay = "10s" }
retries = 0

# The number of threads to run tests with. Supported values are either an integer or
# the string "num-cpus". Can be overridden through the `--test-threads` option.
test-threads = "num-cpus"

# The number of threads required for each test. This is generally used in overrides to
# mark certain tests as heavier than others. However, it can also be set as a global parameter.
threads-required = 1

# Show these test statuses in the output.
#
# The possible values this can take are:
# * none: no output
# * fail: show failed (including exec-failed) tests
# * retry: show flaky and retried tests
# * slow: show slow tests
# * pass: show passed tests
# * skip: show skipped tests (most useful for CI)
# * all: all of the above
#
# Each value includes all the values above it; for example, "slow" includes
# failed and retried tests.
#
# Can be overridden through the `--status-level` flag.
status-level = "pass"

# Similar to status-level, show these test statuses at the end of the run.
final-status-level = "flaky"

# "failure-output" defines when standard output and standard error for failing tests are produced.
# Accepted values are
# * "immediate": output failures as soon as they happen
# * "final": output failures at the end of the test run
# * "immediate-final": output failures as soon as they happen and at the end of
# the test run; combination of "immediate" and "final"
# * "never": don't output failures at all
#
# For large test suites and CI it is generally useful to use "immediate-final".
#
# Can be overridden through the `--failure-output` option.
failure-output = "immediate"

# "success-output" controls production of standard output and standard error on success. This should
# generally be set to "never".
success-output = "never"

# Cancel the test run on the first failure. For CI runs, consider setting this
# to false.
fail-fast = true

# Treat a test that takes longer than the configured 'period' as slow, and print a message.
# See <https://nexte.st/book/slow-tests> for more information.
#
# Optional: specify the parameter 'terminate-after' with a non-zero integer,
# which will cause slow tests to be terminated after the specified number of
# periods have passed.
# Example: slow-timeout = { period = "60s", terminate-after = 2 }
slow-timeout = { period = "60s" }

# Treat a test as leaky if after the process is shut down, standard output and standard error
# aren't closed within this duration.
#
# This usually happens in case of a test that creates a child process and lets it inherit those
# handles, but doesn't clean the child process up (especially when it fails).
#
# See <https://nexte.st/book/leaky-tests> for more information.
leak-timeout = "100ms"

# `nextest archive` automatically includes any build output required by a standard build.
# However sometimes extra non-standard files are required.
# To address this "archive-include" specifies additional paths that will be included in the archive.
archive-include = [
    # Examples:
    #
    # { path = "application-data", relative-to = "target" },
    # { path = "data-from-some-dependency/file.txt", relative-to = "target" },
    #
    # In the above example:
    # * the directory and its contents at "target/application-data" will be included recursively in the archive.
    # * the file "target/data-from-some-dependency/file.txt" will be included in the archive.
]

[profile.default.junit]
# Output a JUnit report into the given file inside 'store.dir/<profile-name>'.
# If unspecified, JUnit is not written out.

# path = "junit.xml"

# The name of the top-level "report" element in JUnit report. If aggregating
# reports across different test runs, it may be useful to provide separate names
# for each report.
report-name = "nextest-run"

# Whether standard output and standard error for passing tests should be stored in the JUnit report.
# Output is stored in the <system-out> and <system-err> elements of the <testcase> element.
store-success-output = false

# Whether standard output and standard error for failing tests should be stored in the JUnit report.
# Output is stored in the <system-out> and <system-err> elements of the <testcase> element.
#
# Note that if a description can be extracted from the output, it is always stored in the
# <description> element.
store-failure-output = true

# This profile is activated if MIRI_SYSROOT is set.
[profile.default-miri]
```
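The retries and slow-timeout settings above involve some timing arithmetic. The Rust sketch below models it under stated assumptions: exponential backoff is assumed to double the delay on every retry and to be capped at max-delay, and jitter is not modeled at all. Backoff, retry_delays, SlowState and slow_state are hypothetical names, not nextest's API. With slow-timeout = { period = "60s", terminate-after = 2 }, a test is reported as slow once 60 seconds have elapsed and is terminated once 2 periods (120 seconds) have elapsed.

```rust
use std::time::Duration;

/// Backoff strategies named in the `retries` examples of the default config.
#[derive(Clone, Copy, Debug)]
enum Backoff {
    Fixed,
    Exponential,
}

/// Delays before each of `count` retries.
/// ASSUMPTION: exponential backoff doubles the delay on every attempt and is
/// capped at `max_delay`; jitter is not modeled.
fn retry_delays(
    backoff: Backoff,
    count: u32,
    delay: Duration,
    max_delay: Option<Duration>,
) -> Vec<Duration> {
    (0..count)
        .map(|attempt| match backoff {
            Backoff::Fixed => delay,
            Backoff::Exponential => {
                // delay * 2^attempt, saturating instead of overflowing.
                let grown = delay.saturating_mul(2u32.saturating_pow(attempt));
                max_delay.map_or(grown, |max| grown.min(max))
            }
        })
        .collect()
}

/// State of a running test relative to its `slow-timeout`.
#[derive(Debug, PartialEq)]
enum SlowState {
    Running,
    Slow { periods: u32 },
    Terminate,
}

/// Classifies a test that has been running for `elapsed`.
/// `period` must be non-zero; `terminate_after` mirrors the optional setting.
fn slow_state(elapsed: Duration, period: Duration, terminate_after: Option<u32>) -> SlowState {
    // Number of whole periods that have elapsed so far.
    let periods = (elapsed.as_nanos() / period.as_nanos()) as u32;
    match terminate_after {
        Some(limit) if periods >= limit => SlowState::Terminate,
        _ if periods >= 1 => SlowState::Slow { periods },
        _ => SlowState::Running,
    }
}
```

For example, under the doubling assumption, retries = { backoff = "exponential", count = 5, delay = "1s", max-delay = "10s" } would wait 1, 2, 4, 8 and then 10 seconds, because the fifth delay (16 seconds) is capped.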

Profiles

With cargo-nextest, local and CI runs often need to use different settings. For example, CI test runs should not be cancelled as soon as the first test failure is seen.

cargo-nextest supports multiple profiles, where each profile is a set of options for cargo-nextest. Profiles are selected on the command line with the -P or --profile option. Most individual configuration settings can also be overridden at the command line.

Here is a recommended profile for CI runs:

```toml
[profile.ci]
# Print out output for failing tests as soon as they fail, and also at the end
# of the run (for easy scrollability).
failure-output = "immediate-final"
# Do not cancel the test run on the first failure.
fail-fast = false
```

After checking the profile into .config/nextest.toml, use cargo nextest run --profile ci in your CI runs.

Note: Nextest's embedded configuration may define new profiles whose names start with default- in the future. To avoid backwards compatibility issues, do not name custom profiles starting with default-.

Tool-specific configuration

Some tools that integrate with nextest may wish to customize nextest's defaults. However, in most cases, command-line arguments and repository-specific configuration should still override those defaults.

To support these tools, nextest supports the --tool-config-file argument. Values to this argument are specified in the form tool:/path/to/config.toml. For example, if your tool my-tool needs to call nextest with customized defaults, it should run:

cargo nextest run --tool-config-file my-tool:/path/to/my/config.toml

The --tool-config-file argument may be specified multiple times. Config files specified earlier are higher priority than those that come later.
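The following Rust sketch shows one way to interpret these values: split each argument at the first colon into a tool name and a path, then give precedence to earlier arguments. ToolConfigArg, parse_tool_config_arg and highest_priority are hypothetical helpers, not nextest's actual parser, and splitting at the first colon is an assumption made here so that paths which themselves contain colons stay intact.

```rust
/// A parsed `--tool-config-file` value (hypothetical type for illustration).
#[derive(Debug, PartialEq)]
struct ToolConfigArg<'a> {
    tool: &'a str,
    path: &'a str,
}

/// Parses `tool:/path/to/config.toml`. Splits on the first ':' only, so a
/// path such as a Windows `C:\...` path is kept intact.
fn parse_tool_config_arg(arg: &str) -> Result<ToolConfigArg<'_>, String> {
    match arg.split_once(':') {
        Some((tool, path)) if !tool.is_empty() && !path.is_empty() => {
            Ok(ToolConfigArg { tool, path })
        }
        _ => Err(format!("expected <tool>:<path>, got {arg:?}")),
    }
}

/// Picks a setting from tool config files listed in command-line order.
/// Earlier files have higher priority, so the first one that sets it wins.
fn highest_priority<T: Copy>(values_in_arg_order: &[Option<T>]) -> Option<T> {
    values_in_arg_order.iter().find_map(|value| *value)
}
```

For the my-tool example above, parse_tool_config_arg would yield the tool name my-tool and the path /path/to/my/config.toml.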

Hierarchical configuration

Configuration is resolved in the following order (the examples use the retries setting):

- Command-line arguments. For example, if --retries=3 is specified on the command line, failing tests are retried up to 3 times.
- Environment variables. For example, if NEXTEST_RETRIES=4 is set in the environment, failing tests are retried up to 4 times.
- Per-test overrides, if they're supported for this configuration variable.
- If a profile is specified, profile-specific configuration in .config/nextest.toml. For example, if the repository-specific configuration sets retries = 2 under [profile.ci], then, if --profile ci is selected, failing tests are retried up to 2 times.
- If a profile is specified, tool-specific configuration for the given profile.
- Repository-specific configuration for the default profile. For example, if the repository-specific configuration sets retries = 5 under [profile.default], then failing tests are retried up to 5 times.
- Tool-specific configuration for the default profile.
- The default configuration listed above, which is that tests are never retried.
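The resolution order above amounts to "take the first layer that sets a value." The Rust sketch below models it for retries only. RetriesLayers and resolve_retries are hypothetical names, and the sketch assumes each layer can be represented as an optional value; layers that only apply when a profile is selected are simply left as None when no profile is given.

```rust
/// Possible sources of `retries`, highest priority first, mirroring the list
/// above. `None` means that layer does not set the value.
#[derive(Default)]
struct RetriesLayers {
    cli: Option<u32>,               // --retries
    env: Option<u32>,               // NEXTEST_RETRIES
    per_test_override: Option<u32>, // per-test overrides
    repo_profile: Option<u32>,      // [profile.<name>] in .config/nextest.toml
    tool_profile: Option<u32>,      // [profile.<name>] in a tool config file
    repo_default: Option<u32>,      // [profile.default] in .config/nextest.toml
    tool_default: Option<u32>,      // [profile.default] in a tool config file
}

/// Built-in default from the embedded configuration: never retry.
const BUILT_IN_RETRIES: u32 = 0;

/// Returns the value from the highest-priority layer that sets one.
fn resolve_retries(layers: &RetriesLayers) -> u32 {
    layers
        .cli
        .or(layers.env)
        .or(layers.per_test_override)
        .or(layers.repo_profile)
        .or(layers.tool_profile)
        .or(layers.repo_default)
        .or(layers.tool_default)
        .unwrap_or(BUILT_IN_RETRIES)
}
```

For instance, with NEXTEST_RETRIES=4 set, retries = 2 under [profile.ci] and retries = 5 under [profile.default], the environment variable wins and failing tests are retried up to 4 times.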

Environment variables

This section contains information about the environment variables nextest reads and sets.

Environment variables nextest reads

Nextest reads some of its command-line options as environment variables. In all cases, passing in a command-line option overrides the respective environment variable.

- NEXTEST_PROFILE: Nextest profile to use while running tests.
- NEXTEST_TEST_THREADS: Number of tests to run simultaneously.
- NEXTEST_RETRIES: Number of times to retry running tests.
- NEXTEST_HIDE_PROGRESS_BAR: If set to "1", always hide the progress bar.
- NEXTEST_FAILURE_OUTPUT and NEXTEST_SUCCESS_OUTPUT: When standard output and standard error are displayed for failing and passing tests, respectively. See Reporter options for possible values.
- NEXTEST_STATUS_LEVEL: Which test statuses (PASS, FAIL, etc.) to display. See Reporter options for possible values.
- NEXTEST_FINAL_STATUS_LEVEL: Which test statuses (PASS, FAIL, etc.) to display at the end of a test run. See Reporter options for possible values.
- NEXTEST_VERBOSE: Verbose output.
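The note in the default configuration that each status level "includes all the values above it" maps naturally onto an ordered enum. The Rust sketch below is a hypothetical model (StatusLevel and is_shown are not nextest's API) that covers only the status-level values listed in that configuration comment; final-status-level, whose default is "flaky", is not modeled.

```rust
/// The `status-level` values from the default configuration, in listed order.
/// Deriving `Ord` makes each variant compare greater than the ones before it.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
enum StatusLevel {
    None,
    Fail,
    Retry,
    Slow,
    Pass,
    Skip,
    All,
}

impl StatusLevel {
    /// Parses a value such as the contents of NEXTEST_STATUS_LEVEL.
    fn parse(value: &str) -> Result<Self, String> {
        match value {
            "none" => Ok(Self::None),
            "fail" => Ok(Self::Fail),
            "retry" => Ok(Self::Retry),
            "slow" => Ok(Self::Slow),
            "pass" => Ok(Self::Pass),
            "skip" => Ok(Self::Skip),
            "all" => Ok(Self::All),
            other => Err(format!("unknown status level {other:?}")),
        }
    }
}

/// Each level includes every level above it, so a result whose own level is
/// `result_level` (one of Fail..=Skip) is shown when it is at or below the
/// configured level.
fn is_shown(configured: StatusLevel, result_level: StatusLevel) -> bool {
    result_level <= configured
}
```

A tool could feed StatusLevel::parse the value of std::env::var("NEXTEST_STATUS_LEVEL"), keeping in mind that the --status-level option overrides the environment variable.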

Nextest also reads the following environment variables to emulate Cargo's behavior.

- CARGO: Path to the cargo binary to use for builds.
- CARGO_TARGET_DIR: Where to place all generated artifacts, relative to the current working directory.
- CARGO_TARGET_<triple>_RUNNER: Support for target runners.
- CARGO_TERM_COLOR: The default color mode: always, auto or never.
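CARGO_TARGET_<triple>_RUNNER is a family of variables, one per target triple. The Rust sketch below builds the variable name for a given triple, assuming Cargo's convention for configuration environment variables (the key is uppercased, with - and . replaced by _). runner_env_var and runner_for are hypothetical helpers for illustration.

```rust
/// Name of Cargo's runner variable for `triple`.
/// ASSUMPTION: Cargo's env-var convention of uppercasing the key and
/// mapping '-' and '.' to '_'.
fn runner_env_var(triple: &str) -> String {
    let key: String = triple
        .chars()
        .map(|c| match c {
            '-' | '.' => '_',
            other => other.to_ascii_uppercase(),
        })
        .collect();
    format!("CARGO_TARGET_{key}_RUNNER")
}

/// Looks up the runner configured for `triple` in the current environment.
fn runner_for(triple: &str) -> Option<String> {
    std::env::var(runner_env_var(triple)).ok()
}
```

For the x86_64-unknown-linux-gnu triple shown in the list output earlier, this yields CARGO_TARGET_X86_64_UNKNOWN_LINUX_GNU_RUNNER.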

Cargo-related environment variables nextest reads

Nextest delegates to Cargo for the build, which recognizes a number of environment variables. See Environment variables Cargo reads for a full list.

Environment variables nextest sets

Nextest exposes these environment variables to your tests at runtime only. They are not set at build time because cargo-nextest may reuse builds done outside of the nextest environment.
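Because these variables are only present at runtime, a test can check them to adjust its behavior when run under nextest. The Rust sketch below is a hypothetical helper (NextestEnv and nextest_env are illustrative names, not an existing crate API) that relies only on the behavior documented in this section: NEXTEST is always "1", NEXTEST_RUN_ID is shared by all tests in one cargo nextest run invocation, and NEXTEST_EXECUTION_MODE is currently process-per-test. Taking a lookup closure instead of calling std::env::var directly lets the logic be checked without mutating the process environment.

```rust
/// Values nextest exposes to a test process.
#[derive(Debug, PartialEq)]
struct NextestEnv {
    /// Shared by every test in a single `cargo nextest run` invocation.
    run_id: String,
    /// Currently always "process-per-test".
    execution_mode: String,
}

/// Returns `Some` when NEXTEST is "1", i.e. the test is running under nextest.
/// `get` abstracts over environment lookup so the logic is easy to test.
fn nextest_env(get: impl Fn(&str) -> Option<String>) -> Option<NextestEnv> {
    if get("NEXTEST").as_deref() != Some("1") {
        return None;
    }
    Some(NextestEnv {
        run_id: get("NEXTEST_RUN_ID").unwrap_or_default(),
        execution_mode: get("NEXTEST_EXECUTION_MODE").unwrap_or_default(),
    })
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn log_nextest_run_id() {
        // Reads the real process environment; this is `None` under `cargo test`.
        match nextest_env(|key| std::env::var(key).ok()) {
            Some(env) => println!("nextest run {} ({})", env.run_id, env.execution_mode),
            None => println!("not running under nextest"),
        }
    }
}
```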

- NEXTEST: always set to "1".
- NEXTEST_RUN_ID: A UUID corresponding to a particular nextest run. All tests run via a particular invocation of cargo nextest run will have the same UUID.
- NEXTEST_EXECUTION_MODE: currently, always set to process-per-test. More options may be added in the future if nextest gains the ability to run all tests within the same process (#27).